Bayesian hyperparameter tuning is a smarter way to optimize machine learning models, saving time and computational power compared to grid or random searches. It uses probabilistic modeling to predict and test the most promising hyperparameter combinations. This method is widely used in industries like healthcare, finance, and e-commerce to improve model performance efficiently.

Here are the top tools for Bayesian optimization:

- Hyperopt: Flexible for complex search spaces but lacks active maintenance.

- Optuna: Modern, easy to use, and scales well for distributed computing.

- Scikit-Optimize: Simple and works seamlessly with scikit-learn.

- GPyOpt: Gaussian process-based and research-oriented, but archived and no longer maintained.

- Ray Tune: Great for large-scale, distributed workloads.

- Microsoft NNI: Multi-framework support with strong automation features.

- Spearmint: Research-focused with built-in tracking.

- BOHB: Combines Bayesian optimization with HyperBand for resource efficiency.

- Auto-sklearn: Fully automated for scikit-learn users.

- H2O AutoML: Handles large datasets and integrates with big data frameworks.

Key Takeaways:

- Small teams: Start with simple tools like Scikit-Optimize or Hyperopt.

- Growing businesses: Optuna or Microsoft NNI offer scalability and flexibility.

- Enterprise-level projects: Ray Tune and H2O AutoML handle complex, large-scale tasks.

Bayesian optimization is an efficient way to boost model accuracy while reducing costs. Choosing the right tool depends on your project size, data complexity, and computational resources.

How Bayesian Hyperparameter Tuning Works

Bayesian optimization takes a smarter approach to hyperparameter tuning by using a probabilistic model to predict how different hyperparameter settings might affect a model's performance. Instead of randomly or systematically testing combinations, it leverages these predictions to guide the search process more efficiently.

This method strikes a balance between exploration - searching untested areas - and exploitation - focusing on settings that have already shown promise. This balance is what makes Bayesian optimization far more efficient than traditional methods like grid search or random search. Unlike Bayesian optimization, these traditional approaches test combinations without learning from previous outcomes.

One of the biggest advantages of Bayesian optimization is its ability to find optimal hyperparameters with far fewer evaluations, cutting down on computational costs and speeding up model training. The table below highlights how Bayesian optimization compares to other methods:

| Method | Efficiency | Complexity | Best for |
|---|---|---|---|
| Grid Search | Low | Simple | Small search spaces |
| Random Search | Medium | Simple | Medium search spaces |
| Bayesian Optimization | High | Complex | Large, complex search spaces |
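
To make the efficiency column more concrete, here is a small sketch that counts how many model fits an exhaustive grid would need. The hyperparameter names and candidate values are hypothetical, chosen only for illustration.

```python
import itertools
import math

# Hypothetical grid: names and candidate values are illustrative only.
grid = {
    "learning_rate": [0.001, 0.01, 0.1, 0.3],
    "max_depth": [3, 5, 7, 9, 11],
    "subsample": [0.6, 0.8, 1.0],
    "n_estimators": [100, 300, 500],
}

# Grid search evaluates the full Cartesian product of all value lists,
# so the cost is the product of the list lengths.
grid_size = math.prod(len(values) for values in grid.values())

# Enumerating the product confirms the count.
combinations = list(itertools.product(*grid.values()))

print(f"Grid search needs {grid_size} model fits")
```

Adding one more hyperparameter multiplies the total again. Random search and Bayesian optimization instead run under an evaluation budget that you choose up front.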

Bayesian methods have been widely adopted across industries like finance, healthcare, and e-commerce to solve complex, real-world problems. For instance, in healthcare, Bayesian updating helps refine the probability of a patient having a disease based on test results. In finance, it supports modeling market volatility and assessing investment risks. Bayesian optimization applies the same idea, updating beliefs as evidence arrives, to the search for good hyperparameters.

Another powerful feature of Bayesian optimization is its ability to integrate prior knowledge into the tuning process. This makes models more resilient to irregularities in data and allows them to quickly adjust to new information - an essential capability in fast-moving industries.

From a technical standpoint, Bayesian optimization relies on surrogate models, such as Gaussian Process Regression or the Tree-structured Parzen Estimator (TPE), to approximate the objective function. It then uses an acquisition function (sometimes called a selection function), like Expected Improvement, to decide the next set of hyperparameters to evaluate. Because the surrogate is cheap to query, this approach minimizes the number of costly model evaluations needed.
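
The selection step can be sketched with the standard closed-form Expected Improvement for a Gaussian prediction. This is a minimal, library-free illustration for a minimization problem. The candidate names and the predicted means and standard deviations are made-up values standing in for a real surrogate's output.

```python
import math


def expected_improvement(mean, std, best_so_far, xi=0.0):
    """Expected Improvement for a minimization problem.

    mean, std: the surrogate's predicted mean and standard deviation
    of the objective at a candidate point.
    best_so_far: lowest objective value observed so far.
    xi: optional margin that encourages more exploration.
    """
    improvement = best_so_far - mean - xi
    if std <= 0.0:
        # No uncertainty: the improvement is known exactly.
        return max(improvement, 0.0)
    z = improvement / std
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return improvement * cdf + std * pdf


# Hypothetical surrogate predictions (mean, std) for three candidates.
candidates = {
    "A": (0.30, 0.01),  # slightly better than the best, low uncertainty
    "B": (0.35, 0.20),  # slightly worse on average, high uncertainty
    "C": (0.60, 0.02),  # clearly worse, low uncertainty
}
best_loss = 0.32

scores = {
    name: expected_improvement(mean, std, best_loss)
    for name, (mean, std) in candidates.items()
}
next_candidate = max(scores, key=scores.get)
```

With these numbers the uncertain candidate B scores highest, which shows how the acquisition function trades exploitation (A) against exploration (B).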

What to Look for in Bayesian Optimization Tools

When it comes to selecting a Bayesian optimization tool, you need to focus on features that ensure smooth integration, scalability, and clear insights into optimization results. A well-chosen tool can streamline your workflows and make your model tuning process far more efficient.

Integration Support

One of the first things to check is whether the tool integrates easily with popular machine learning frameworks like TensorFlow, PyTorch, and scikit-learn. For example, scikit-optimize (skopt) provides BayesSearchCV, a drop-in replacement for scikit-learn's GridSearchCV, while Optuna and Ray Tune integrate with multiple frameworks. This level of compatibility minimizes engineering headaches, allowing your team to optimize models without disrupting their usual workflows.

Scalability for Growing Datasets

As your business grows, so do your datasets. The tool you choose must handle large datasets and numerous hyperparameters efficiently. Tools like Hyperopt, GPyOpt, Ray Tune, and Optuna are known for their ability to scale, offering parallel and distributed computing to speed up the search process. This scalability ensures that your optimization efforts can keep pace with increasing computational demands.

Visualization and Experiment Tracking

Good visualization tools make it easier to track your optimization progress and interpret results. For instance, Optuna comes with a robust visualization suite and dashboard, which simplifies monitoring, comparing, and troubleshooting optimization runs. These features also foster collaboration, as teams can easily share and review results.

Automation Features

Automation can significantly cut down on both time and costs. Tools with features like automated early stopping and trial pruning are particularly valuable. For example, Optuna's pruning capabilities can cut training time to as little as one quarter. While this might occasionally lead to slightly less optimal results, the time savings are often worth the trade-off.
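
As a rough illustration of how trial pruning decides what to stop, here is a simplified median-style rule written in plain Python. It is a sketch of the general idea, not any library's implementation, and the loss curves are invented.

```python
import statistics


def should_prune(intermediate_value, step, history, min_trials=3):
    """Median-style pruning rule for a metric where lower is better.

    history: list of earlier trials, each a list of intermediate
    values indexed by step.
    Returns True when the running trial is worse than the median of
    earlier trials at the same step.
    """
    values_at_step = [trial[step] for trial in history if len(trial) > step]
    if len(values_at_step) < min_trials:
        return False  # Not enough evidence yet to prune safely.
    return intermediate_value > statistics.median(values_at_step)


# Hypothetical validation-loss curves from three finished trials.
finished = [
    [0.90, 0.60, 0.40],
    [0.95, 0.70, 0.55],
    [0.85, 0.50, 0.35],
]

# A new trial reporting 0.80 at step 1 is worse than the median (0.60).
prune_slow_trial = should_prune(0.80, 1, finished)
# A trial reporting 0.55 at the same step is allowed to continue.
prune_good_trial = should_prune(0.55, 1, finished)
```

The `min_trials` guard reflects the trade-off mentioned above: pruning too eagerly saves compute but can discard a configuration that would have improved later.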

Flexible Search Space Handling

Your optimization tool should be able to handle a variety of parameter types, including real-valued, discrete, and conditional parameters. For instance, Hyperopt is well-suited for managing complex search spaces with hundreds of hyperparameters. This flexibility allows the tool to adapt to the specific needs of your models.
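
The three parameter types can be sketched as a sampling function. The search space below is hypothetical and uses only the standard library. A real tool would replace the random draws with model-guided suggestions.

```python
import random


def sample_config(rng):
    """Draw one configuration from a hypothetical conditional search space."""
    config = {
        # Real-valued parameter, sampled on a log scale.
        "learning_rate": 10 ** rng.uniform(-4, -1),
        # Discrete parameter.
        "batch_size": rng.choice([32, 64, 128]),
        # Categorical parameter that controls which others exist.
        "optimizer": rng.choice(["sgd", "adam"]),
    }
    # Conditional parameters: only defined for the chosen optimizer.
    if config["optimizer"] == "sgd":
        config["momentum"] = rng.uniform(0.0, 0.99)
    else:
        config["beta1"] = rng.uniform(0.8, 0.999)
    return config


rng = random.Random(0)  # Seeded so the draws are repeatable.
configs = [sample_config(rng) for _ in range(20)]
```

The conditional branch is what grid-style definitions struggle with: `momentum` has no meaning for a configuration that uses `adam`, so it should not be searched there.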

Usability and Documentation

Ease of use and quality documentation are crucial for quick adoption and efficient troubleshooting. Intuitive APIs, clear guides, and active community support make a big difference. Optuna, for example, is widely praised for its user-friendly design and helpful documentation.

| Feature | Why It Matters | Impact on Business |
|---|---|---|
| Integration Support | Seamless workflow adoption | Lower engineering costs, faster deployment |
| Scalability | Handles large workloads | Supports growth, manages expanding datasets |
| Visualization | Simplifies progress tracking | Better decisions, improved team collaboration |
| Automation | Reduces manual effort | Faster experiments, lower computational costs |
| Search Space Flexibility | Adapts to complex models | Broader use cases, handles sophisticated designs |

Reproducibility and Compliance

For businesses operating in regulated industries, reproducibility and thorough experiment logging are non-negotiable. The tool must log every run, configuration, and result to ensure compliance and enable reproducible research. This is also essential for knowledge sharing within teams and maintaining consistency across projects.
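
One lightweight way to meet this requirement is to seed every trial and append each run to a JSON Lines file. The sketch below assumes a toy trial function. The file name, field names, and the `run_trial` helper are all hypothetical.

```python
import json
import random
import tempfile
from pathlib import Path


def run_trial(seed):
    """Hypothetical trial: the seed fully determines config and score."""
    rng = random.Random(seed)  # Seeded generator makes the trial repeatable.
    config = {
        "learning_rate": round(10 ** rng.uniform(-4, -1), 6),
        "max_depth": rng.randint(2, 10),
    }
    score = round(rng.random(), 6)  # Stand-in for a real validation metric.
    return {"seed": seed, "config": config, "score": score}


def log_trial(record, path):
    """Append one trial as a JSON line so the run history is auditable."""
    with path.open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(record, sort_keys=True) + "\n")


log_path = Path(tempfile.mkdtemp()) / "trials.jsonl"
for seed in range(5):
    log_trial(run_trial(seed), log_path)

# Reading the log back recovers every configuration and result.
logged = [
    json.loads(line)
    for line in log_path.read_text(encoding="utf-8").splitlines()
]
```

Because each record stores its seed, any logged trial can be re-run later and compared against the stored result.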

Tailoring Tools to Your Needs

Your choice of tool should align with your specific business needs. For example, companies with large-scale or cloud-based infrastructures might prioritize tools with distributed optimization capabilities. In contrast, smaller teams may focus on simplicity and easy-to-follow documentation. The increasing demand for distributed and parallel optimization highlights the growing complexity of machine learning in business.

Platforms like AI for Businesses can help small and medium-sized enterprises (SMEs) or startups find the right Bayesian optimization tools tailored to their operational goals and challenges.

1. Hyperopt

Hyperopt is among the pioneers in Bayesian hyperparameter optimization, offering a Python-based solution for both serial and parallel optimization tasks. Although its open-source version is no longer actively maintained, it remains a go-to tool for tackling intricate optimization problems.

"Hyperopt is a Python library for serial and parallel optimization over awkward search spaces, which may include real-valued, discrete, and conditional dimensions."

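Hyperopt's main algorithm is the Tree-structured Parzen Estimator (TPE), offered alongside random search and Adaptive TPE. The library was designed to accommodate Bayesian optimization algorithms based on Gaussian processes and regression trees, but its documentation notes that these are not currently implemented. TPE helps it navigate parameter spaces that grid search handles poorly. Let’s break down what makes Hyperopt a compelling choice for Bayesian hyperparameter tuning.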

Support for Multiple Machine Learning Frameworks

Hyperopt integrates seamlessly with various machine learning frameworks, making it easier to optimize workflows. For instance, it works with scikit-learn pipelines through Hyperopt-Sklearn and supports distributed evaluation using Ray Tune. This integration simplifies both pipeline optimization and scaling efforts.

Scalability and Distributed Computing

One of Hyperopt's standout features is its ability to support distributed computing. By allowing simultaneous hyperparameter evaluations across multiple cores or machines, it significantly reduces optimization time for large-scale projects. It’s particularly effective at exploring non-linear and complex parameter spaces. Hyperopt is lightweight compared to some modern alternatives, but it requires users to define four key components for optimization: the search space, the loss function, the optimization algorithm, and a database to track the history of scores and configurations.
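
The four components can be laid out in a short, library-free sketch. This is not Hyperopt's API. Plain random search stands in for the optimization algorithm, and the objective and ranges are toy assumptions.

```python
import random

# 1. Search space: hypothetical ranges for two hyperparameters.
search_space = {"x": (-5.0, 5.0), "y": (-5.0, 5.0)}


# 2. Loss function: a toy objective standing in for a validation loss.
def loss(params):
    return (params["x"] - 1.0) ** 2 + (params["y"] + 2.0) ** 2


# 3. Optimization algorithm: plain random search as a placeholder for
#    a Bayesian algorithm such as TPE.
def suggest(space, rng):
    return {name: rng.uniform(low, high) for name, (low, high) in space.items()}


# 4. History store: every configuration and its score is recorded.
history = []

rng = random.Random(42)
for _ in range(200):
    params = suggest(search_space, rng)
    history.append({"params": params, "loss": loss(params)})

best = min(history, key=lambda record: record["loss"])
```

In Hyperopt itself these roles are filled by an `hp` search space, an objective function, an algorithm such as `tpe.suggest`, and a `Trials` object, all passed to `fmin`.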

Handling Complex and Conditional Search Spaces

Hyperopt shines when working with conditional and intricate search spaces that involve a mix of real-valued, discrete, and conditional dimensions. However, its manual configuration process may feel cumbersome compared to newer tools that offer more automation and user-friendly trial management. This manual setup, while giving users greater control, can be a double-edged sword depending on the project’s complexity.

Things to Keep in Mind

Before adopting Hyperopt, it’s worth considering its limitations alongside its strengths. Databricks now recommends Optuna for single-node optimization or Ray Tune for distributed hyperparameter tuning as alternatives to the open-source version of Hyperopt. While Hyperopt is still a solid choice for projects requiring flexible handling of complex search spaces, its lack of active maintenance might steer users toward newer tools with better support, easier configurations, and more comprehensive documentation. Though it offers unique advantages, modern solutions often deliver a more streamlined and user-friendly experience.

2. Optuna

Optuna is a cutting-edge, framework-independent library designed for hyperparameter optimization. Known for its modern approach, it simplifies Bayesian optimization and automates complex processes, making it a favorite among machine learning practitioners.

Support for Various Machine Learning Frameworks

Optuna seamlessly integrates with popular machine learning frameworks like TensorFlow, Keras, Scikit-Learn, LightGBM, PyTorch, and XGBoost. Its framework-specific callbacks ensure smooth operation, fitting neatly into existing workflows. To begin using Optuna, you simply wrap your model training logic inside an objective function, use the trial object to suggest hyperparameter values, and create a study to manage the optimization process.

The library supports Python 3.8 and newer versions. Additionally, OptunaHub provides a platform where users can share and access optimization components, making it easier to explore and implement advanced features.

Scalability and Distributed Computing Capabilities

Optuna shines when it comes to scalability. It integrates with tools like Ray and Dask to handle distributed optimization across large compute clusters, enabling faster processing of complex tasks.

A March 2025 study by Loïc Cudennec highlighted Optuna's scalability, showing its ability to process 1.5 million suggestions and 5 million evaluations on a 1,024-core system. Using the OptunaP2P extension, the computation speed tripled, demonstrating its efficiency in large-scale scenarios.

For teams deploying models in cloud environments, MLflow offers Optuna integration through classes like MlflowStorage and MlflowSparkStudy. These tools enable parallel studies using PySpark executors, a feature especially useful for organizations already leveraging MLflow for tracking experiments and managing models.

Automation Features Like Early Stopping and Conditional Search Space Handling

One of Optuna's standout features is its ability to automate complex tasks, saving users from tedious manual configurations. It handles conditional search spaces and includes built-in pruning mechanisms to terminate unpromising trials early, conserving time and resources.

The library's trial management system dynamically adjusts its suggestions based on previous results, which is particularly helpful for tasks like neural architecture search. This is crucial in scenarios where parameter relationships are not immediately clear, streamlining the optimization process in intricate machine learning pipelines.

Unlike tools that demand heavy upfront configuration, Optuna strikes a balance between automation and customization. Its ease of use appeals to beginners, while its advanced features cater to experienced users tackling challenging optimization problems. These capabilities firmly establish Optuna as a standout tool for Bayesian optimization.

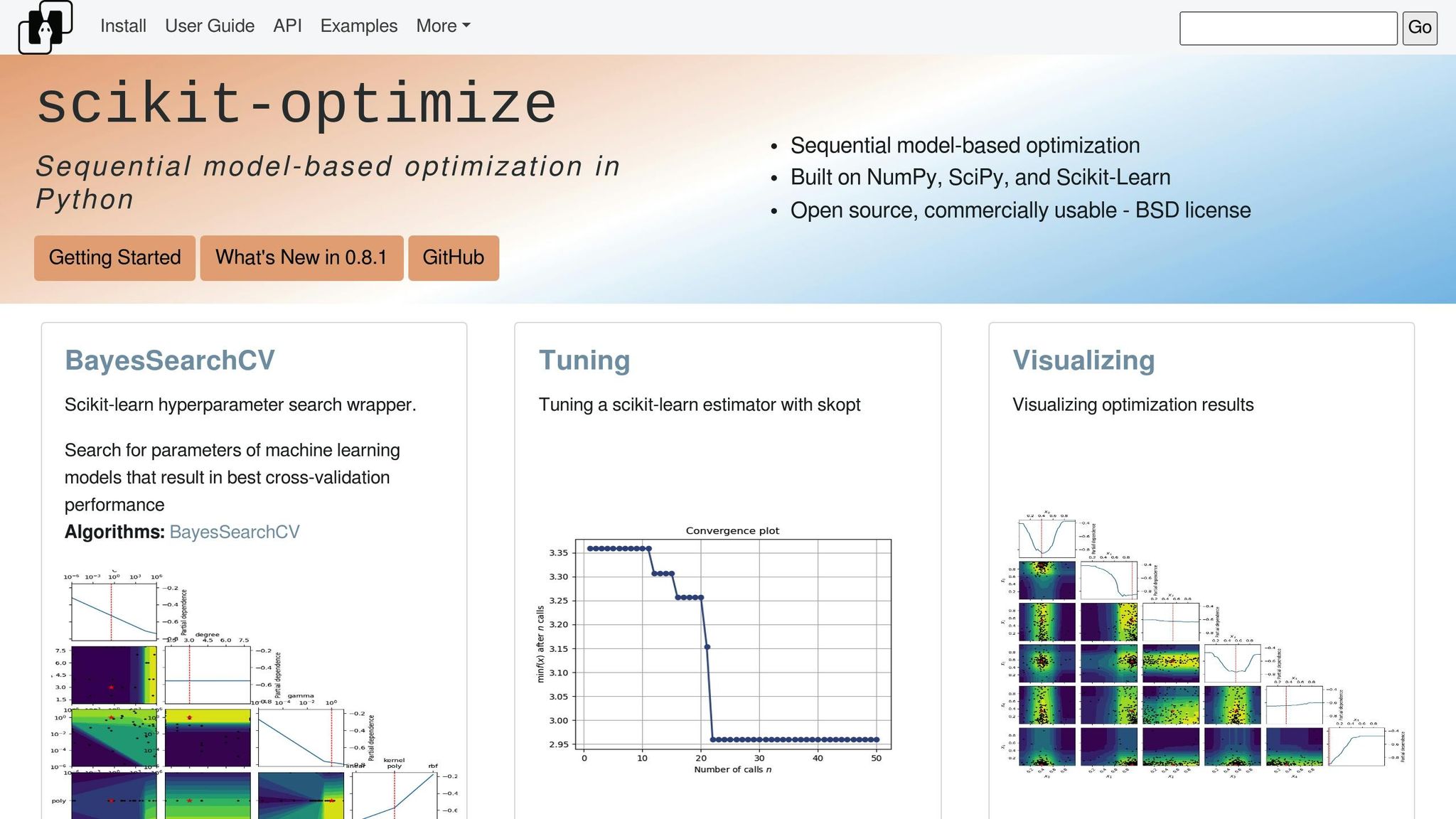

3. Scikit-Optimize (skopt)

Scikit-Optimize simplifies hyperparameter tuning while maintaining strong performance, making it a practical choice for teams aiming to strike a balance between ease of use and effectiveness. Built on NumPy, SciPy, and Scikit-Learn, this Bayesian optimization library focuses on accessibility without compromising functionality.

Support for Various Machine Learning Frameworks

One of Scikit-Optimize's standout features is its seamless integration with Scikit-Learn, making it a natural fit for tuning hyperparameters of machine learning models within this widely-used framework. Its design prioritizes usability, ensuring it can be applied across a variety of contexts.

For those transitioning from traditional methods like GridSearchCV, Scikit-Optimize serves as a convenient drop-in replacement, offering Bayesian optimization as an alternative. It supports diverse models with customizable search spaces and varying evaluation counts, giving users flexibility and control.

In addition to its integration capabilities, Scikit-Optimize provides tools to monitor optimization progress, helping users stay informed at every step.

Visualization of Optimization Progress and Results

One area where Scikit-Optimize truly shines is its visualization capabilities, which transform complex optimization data into clear, actionable insights. The library's skopt.plots module includes functions designed to make sense of the optimization process.

For instance, the plots.plot_evaluations function generates a grid of visualizations, mapping how sample evaluations evolve during optimization. This grid, sized n_dims by n_dims, includes diagonal histograms for each parameter and scatter plots on the lower triangle to show the relationships between evaluated points. A color-coded scheme - ranging from darker purple for earlier samples to lighter yellow for recent ones - helps track the progression of the search strategy.

Other visualization tools include:

- plots.plot_objective: Displays the partial dependence of the objective function, as modeled by the surrogate.

- plots.plot_convergence: Tracks optimization progress by highlighting the best results achieved at each iteration.

These tools provide insights into parameter interactions, convergence trends, and areas of the search space needing further exploration. They can also help identify parameters with little impact on performance, allowing you to streamline future searches. All visualizations are compatible with the OptimizeResult instance returned by skopt's minimizers, ensuring a smooth and intuitive workflow.
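
The quantity a convergence plot tracks, the best result achieved at each iteration, is simply a running minimum. The sketch below computes it with the standard library on made-up loss values. It illustrates the idea only and does not call skopt.

```python
from itertools import accumulate

# Hypothetical objective values in the order they were evaluated.
losses = [0.92, 0.75, 0.81, 0.60, 0.66, 0.58, 0.59]

# Best-so-far curve: the minimum seen up to and including each iteration.
best_so_far = list(accumulate(losses, min))

# Iterations at which the search found a new best value.
improved_at = [
    i for i, (loss, best) in enumerate(zip(losses, best_so_far))
    if loss == best
]
```

A curve that stays flat for many iterations suggests the search has converged or that the remaining budget is better spent on a different region of the search space.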

4. GPyOpt

GPyOpt is an open-source Python library designed for Bayesian optimization. It was developed by the Machine Learning group at the University of Sheffield and is built on GPy, a Python framework for Gaussian process modeling. However, the core team has since shifted focus, and the repository was officially archived on February 23, 2023. While the library is still functional, it’s important to note that no new updates or active maintenance are planned.

5. Ray Tune

Ray Tune is a distributed library designed for hyperparameter tuning, built on top of Ray. It can run experiments on a single machine or scale them across multiple nodes in a cluster, making it an efficient tool for Bayesian optimization.

Support for Multiple Machine Learning Frameworks

Ray Tune works seamlessly with popular frameworks like PyTorch, XGBoost, TensorFlow, and Keras. It also integrates with optimization libraries such as Ax, BayesOpt, BOHB, Nevergrad, and Optuna. To make adoption easier, the library offers detailed examples and tutorials, including support for MLflow. This versatility allows Ray Tune to adapt to various machine learning workflows.

Scalability and Distributed Computing

One of Ray Tune's standout features is its ability to scale. Whether you're working on a single workstation or a large cluster, Ray Tune accelerates Bayesian optimization by running parallel evaluations, significantly reducing the time required for experimentation.
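
The benefit of parallel evaluations can be illustrated without a cluster. This sketch uses Python's standard `concurrent.futures` rather than Ray's API, with a toy objective and a hypothetical batch of configurations.

```python
from concurrent.futures import ThreadPoolExecutor


def evaluate(config):
    """Toy objective standing in for training and scoring one model."""
    return (config["lr"] - 0.1) ** 2 + 0.01 * config["layers"]


# Hypothetical batch of configurations proposed by the optimizer.
batch = [
    {"lr": lr, "layers": layers}
    for lr in (0.01, 0.1, 0.3)
    for layers in (1, 2)
]

# Evaluate the whole batch concurrently; map() preserves input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    losses = list(pool.map(evaluate, batch))

best_config = batch[losses.index(min(losses))]
```

Threads are used here only to keep the example short. Real training jobs are usually CPU- or GPU-bound, which is where a scheduler that spreads trials over processes and machines becomes useful.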

Tools for Visualizing Optimization Progress

Ray Tune doesn’t just focus on performance - it also provides tools to help you monitor and understand your experiments. It includes built-in visualization capabilities for tracking optimization progress and integrates directly with TensorBoard. This makes it easy to analyze results at both the experiment and trial levels. For example, you can use matplotlib to plot metrics from your trials:

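```python
import matplotlib.pyplot as plt

# result_grid is the ResultGrid returned by an earlier tuner.fit() call.
for i, result in enumerate(result_grid):
    # Each result exposes its per-iteration metrics as a DataFrame.
    plt.plot(
        result.metrics_dataframe["training_iteration"],
        result.metrics_dataframe["mean_accuracy"],
        label=f"Trial {i}",
    )

plt.xlabel("Training Iterations")
plt.ylabel("Mean Accuracy")
plt.legend()
plt.show()
```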

In one notable case, researchers used Ray Tune to optimize a DCGAN for generating images from datasets like MNIST, STL10, and CelebA. They tuned hyperparameters such as learning rate, beta1, beta2, batch size, and epochs. By integrating with Weights & Biases, they tracked metrics and visualized the generated images, offering a comprehensive view of the optimization process.

6. Microsoft NNI

Microsoft's Neural Network Intelligence (NNI) is a free and open-source AutoML toolkit designed to simplify the process of finding the best neural architectures and hyperparameters. Developed by Microsoft, NNI automates tasks like feature engineering, model compression, and neural architecture search, making it an efficient tool for optimizing machine learning models.

Compatibility with Multiple Machine Learning Frameworks

NNI works seamlessly with a variety of machine learning frameworks. For deep learning, it supports PyTorch, TensorFlow, Keras, Theano, and Caffe2. It also integrates with traditional libraries like Scikit-learn, XGBoost, CatBoost, and LightGBM. Additionally, it offers a range of Bayesian optimization algorithms, including TPE, GP Tuner, Metis Tuner, and BOHB, to enhance the tuning process.

Scalability and Distributed Computing

NNI is built to scale, whether you're running experiments on a local machine, remote servers, or cloud platforms like Azure Kubernetes Service (AKS). It supports over nine training services, making it adaptable to various infrastructure needs. From small-scale tests on local machines to large-scale deployments on platforms like Azure Machine Learning, Kubeflow, AdaptDL, or OpenPAI, NNI is designed to handle projects of all sizes. This scalability makes it a powerful option for businesses aiming to streamline AI model optimization.

7. Spearmint

Spearmint is a software package tailored for Bayesian optimization. Its purpose? To fine-tune parameters and minimize objective functions with as few experimental runs as possible. This makes it a practical tool for streamlining machine learning workflows.

What sets Spearmint apart is its modular design. Users can mix and match different "driver" and "chooser" modules based on their specific needs. The chooser modules handle acquisition functions like expected improvement, UCB, or random selection, while the driver modules oversee the distribution and execution of experiments.
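
The idea of swappable choosers can be sketched as interchangeable functions that pick the next candidate from a surrogate's predictions. The function names and prediction values below are hypothetical and do not reflect Spearmint's actual module interface.

```python
import random


def lcb_chooser(predictions, rng, kappa=2.0):
    """Confidence-bound chooser for minimization: favors a low predicted
    mean or high uncertainty (the minimization counterpart of UCB)."""
    return min(
        predictions,
        key=lambda name: predictions[name][0] - kappa * predictions[name][1],
    )


def random_chooser(predictions, rng):
    """Baseline chooser: picks any candidate uniformly at random."""
    return rng.choice(sorted(predictions))


# Hypothetical surrogate predictions: candidate -> (mean, std).
predictions = {"a": (0.40, 0.02), "b": (0.45, 0.10), "c": (0.70, 0.05)}

rng = random.Random(0)
choosers = {"lcb": lcb_chooser, "random": random_chooser}

# Because every chooser shares one signature, they can be swapped freely.
picks = {name: chooser(predictions, rng) for name, chooser in choosers.items()}
```

Here the confidence-bound chooser prefers candidate `b`, whose larger uncertainty outweighs its slightly worse predicted mean.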

Scalability and Distributed Computing

Spearmint is built to handle everything from simple setups on a single machine to more complex, distributed workloads using cluster deployments and grid schedulers. Its ability to execute experiments in parallel significantly speeds up the process of finding optimal hyperparameters.

Tracking and Visualizing Optimization Progress

One of Spearmint's standout features is its built-in tracking and visualization system. When launched with the -w flag, it starts a local web server that displays a status page with real-time information about optimization runs. It also generates several output files to help users monitor progress, such as:

- A trace.csv file that logs the complete history of experiments and tracks the best results over time.

- Individual text files for each job, stored in the output directory.

- A best_job_and_result.txt file, updated at every iteration, which highlights the best result observed along with its job ID and parameter details.

Spearmint operates on Python and requires dependencies like NumPy, SciPy, and pymongo. These requirements make it a convenient choice for most data science teams already working with Python-based machine learning tools.

8. BOHB (Bayesian Optimization with HyperBand)

BOHB (Bayesian Optimization with HyperBand) combines the precision of Bayesian optimization with the resource-saving efficiency of HyperBand. This hybrid approach allows it to quickly identify the best hyperparameter configurations, even for models that demand significant computational power.

Scalability and Distributed Computing Capabilities

BOHB is designed to handle the growing data demands of deep learning. By utilizing both model parallelism and data parallelism, it can efficiently process large datasets and manage computational resources in distributed environments. This makes it a valuable tool across industries like finance, healthcare, autonomous systems, and natural language processing.

Automation Features Like Early Stopping

One of BOHB's standout features is its use of HyperBand for early stopping. This feature speeds up the optimization process significantly. For example, research shows that early stopping with BOHB can boost convergence rates by over 200% and allow exploration of up to 167% more configurations within the same time frame. By dynamically halting trials that show little promise, BOHB allocates resources more efficiently than traditional methods like random or grid search, making it especially useful in scenarios with limited computational resources.
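
The early-stopping half of this approach builds on successive halving, the routine at the core of HyperBand. Below is a simplified sketch of that routine alone. Configurations are drawn at random here, whereas BOHB would propose them from its model, and the toy loss function is an assumption.

```python
import random


def successive_halving(configs, evaluate, min_budget=1, eta=3):
    """Keep the best 1/eta of configurations each round and give the
    survivors eta times more budget (lower loss is better)."""
    budget = min_budget
    rounds = []
    while len(configs) > 1:
        scored = sorted(configs, key=lambda c: evaluate(c, budget))
        keep = max(1, len(scored) // eta)
        rounds.append((budget, len(configs)))
        configs = scored[:keep]
        budget *= eta
    return configs[0], rounds


def evaluate(config, budget):
    """Toy loss: approaches the config's true quality as budget grows."""
    return config["quality"] + 1.0 / budget


rng = random.Random(1)
# Random sampling here; BOHB would draw these from its model instead.
candidates = [{"id": i, "quality": rng.random()} for i in range(27)]

winner, rounds = successive_halving(candidates, evaluate)
```

Starting from 27 candidates with `eta=3`, the rounds evaluate 27, then 9, then 3 configurations at budgets 1, 3, and 9, so most of the compute goes to the configurations that survive.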

9. Auto-sklearn

Auto-sklearn is a standout tool in the world of Bayesian optimization, offering a fully automated approach to simplify model tuning from start to finish.

Built on top of scikit-learn, Auto-sklearn serves as an AutoML toolkit that leverages Sequential Model-Based Optimization (SMBO) to explore model configurations and hyperparameters efficiently.

This tool has earned its reputation, winning the ChaLearn AutoML challenge. In benchmark studies, it placed in the top three for over 70% of datasets tested in the OpenML AutoML Benchmark, consistently surpassing manual hyperparameter tuning methods.

Compatibility with Scikit-learn

Auto-sklearn integrates directly with scikit-learn, giving users access to its full suite of estimators, preprocessors, and pipelines. This compatibility makes it suitable for both classification and regression tasks.

Key Automation Features: Early Stopping and Conditional Search

What sets Auto-sklearn apart is its ability to automate the entire machine learning pipeline. From data preprocessing and model selection to hyperparameter tuning, it handles everything. Features like intelligent early stopping and adaptive conditional search ensure efficient optimization. Additionally, it combines the top-performing models into an ensemble to boost predictive accuracy.

For instance, imagine a business trying to predict customer churn. By feeding historical data into Auto-sklearn, the tool automatically identifies the best model and fine-tunes its hyperparameters using Bayesian optimization. This saves time and effort, allowing the company to quickly deploy an effective predictive model - helping improve customer retention and revenue.

While Auto-sklearn is free and user-friendly, it does have limitations. It’s designed for single-machine use, which can make it less suitable for handling very large datasets. However, its automation and ease of use make it a go-to choice for many looking to streamline hyperparameter tuning with Bayesian optimization.

10. H2O AutoML

H2O AutoML stands out as a robust, open-source tool designed to streamline the entire machine learning workflow. From training to tuning, it automates the process of optimizing multiple models within a time frame specified by the user. By repeatedly adjusting hyperparameters, it identifies the best-performing algorithm for your dataset. With over 18,000 organizations relying on its automation capabilities, H2O AutoML has become a trusted name in the field.

Support for a Range of Machine Learning Frameworks

H2O AutoML is compatible with a variety of algorithms and integrates seamlessly with big data frameworks. It supports algorithms like DRF, GLM, XGBoost, GBM, DeepLearning, and StackedEnsemble. Additionally, through Sparkling Water, it connects with Hadoop, Spark, and related ecosystems, combining H2O's machine learning tools with Spark's data processing power. This integration enhances Spark's capabilities, making it a versatile choice for data scientists working with large-scale data.

Built for Scalability and Distributed Computing

H2O AutoML is designed to handle large datasets efficiently. Its architecture uses parallelized in-memory processing to speed up machine learning tasks. Whether running on bare metal or integrated with Hadoop, Spark, or Kubernetes clusters, H2O AutoML can ingest data directly from sources like HDFS, Spark, S3, or Azure Data Lake into its in-memory distributed key-value store.

As one business leader put it: "With H2O we are able to build many models in a much shorter period of time". Beyond its scalability, the platform also includes tools for tracking experiments and visualizing results, making it easier to manage complex workflows.

Interactive Visualization and Model Insights

H2O AutoML offers a modern web interface powered by H2O Wave, providing interactive visualizations through the H2O Model Explainability suite. This user-friendly interface allows users to upload data, run AutoML experiments, and explore results visually. With just one function call, users can access detailed model explainability.

For those who need more granular insights, the platform provides access to meta information through the event_log and training_info features, enabling data scientists to make well-informed decisions based on model performance. This level of transparency ensures users have a clear understanding of their models' behavior.

Tool Comparison Table

Below is a quick comparison of key features to help you pick the right Bayesian optimization tool for your business needs.

| Tool | Algorithms | Frameworks | Distributed Computing | Visualization | Pricing | Business Suitability |
|---|---|---|---|---|---|---|
| Hyperopt | Random Search, Tree of Parzen Estimators, Adaptive TPE | Scikit-learn, Keras, Theano | Yes, parallel evaluations across cores or machines | Basic plotting | Free | Small to medium businesses, beginners |
| Optuna | GridSearch, Random Search, Bayesian, Evolutionary | PyTorch, TensorFlow, Keras, MXNet, Scikit-Learn, LightGBM | Yes, with distributed trials | Interactive dashboard | Free | All business sizes, framework-agnostic needs |
| Scikit-Optimize | Sequential model-based (Bayesian) optimization | Scikit-learn native | Limited | Matplotlib integration | Free | Small businesses, scikit-learn users |
| GPyOpt | Gaussian Process optimization | Custom integration required | No | Basic plotting | Free | Research-focused, academic use |
| Ray Tune | Integrates with Ax/Botorch, HyperOpt, Bayesian Optimization | PyTorch, TensorFlow, XGBoost, LightGBM, Scikit-Learn, Keras | Yes, built-in scaling | TensorBoard integration | Free | Large-scale projects, enterprise |
| Microsoft NNI | TPE, Random Search, GP Tuner, Metis Tuner, BOHB | PyTorch, TensorFlow, Keras, Theano, Caffe2, Scikit-learn, XGBoost | Yes, multi-platform | Web UI with experiment tracking | Free | Enterprise, multi-framework environments |
| Spearmint | Gaussian Process Bandits | Custom integration | Yes, via cluster and grid schedulers | Local status web page, trace files | Free | Academic research, specialized use |
| BOHB | Bayesian Optimization with HyperBand | Custom integration required | Yes, with Dask | Basic reporting | Free | Resource-constrained environments |
| Auto-sklearn | Bayesian optimization for AutoML | Scikit-learn exclusive | Limited | Model performance plots | Free | Automated ML workflows, beginners |
| H2O AutoML | DRF, GLM, XGBoost, GBM, DeepLearning, StackedEnsemble | Hadoop, Spark ecosystems via Sparkling Water | Yes, in-memory distributed processing | H2O Wave web interface, Model Explainability suite | Free | Enterprise, big data environments |

Key Considerations for Choosing a Tool

- Startups: Free, open-source tools like Hyperopt, Optuna, or Scikit-Optimize are ideal for keeping costs low.

- Growing Businesses: Tools like Optuna and Microsoft NNI offer flexibility across multiple frameworks, making them a great choice for scaling operations.

- Enterprise-Level Projects: Ray Tune and H2O AutoML are perfect for large-scale environments. Ray Tune handles distributed workloads well, while H2O AutoML excels in automation and big data integration.

- Beginners and Simpler Workflows: Auto-sklearn and Scikit-Optimize provide straightforward interfaces with seamless integration into familiar workflows.

Features That Stand Out

- Distributed Computing: Tools like Ray Tune, Microsoft NNI, and H2O AutoML significantly cut down training times for large datasets with parallel processing.

- Advanced Monitoring: H2O AutoML's Model Explainability suite and Microsoft NNI's web interface offer detailed experiment tracking, making it easier to manage multiple trials and present results to stakeholders.

This table highlights the strengths of each tool, helping you align your choice with your specific business needs and technical requirements.

Conclusion

Choosing the right Bayesian optimization tool can significantly impact the success of your machine learning projects. Hyperparameter tuning alone has been shown to boost model accuracy by up to 30%, and 85% of successful data science projects attribute their achievements to teams that deeply understand parameter selection and its effects.

For startups and small businesses, free and easy-to-use tools like Hyperopt or Scikit-Optimize offer a cost-effective way to achieve reliable results. Companies experiencing growth may find tools like Optuna and Microsoft NNI better suited to their needs, thanks to their flexibility and ability to scale with increasing demands. Meanwhile, enterprises managing large-scale datasets can take advantage of the distributed computing capabilities of tools like Ray Tune or H2O AutoML, which are designed to handle complex models efficiently. Matching the right tool to your business needs ensures both optimal performance and efficient use of resources.

Bayesian optimization stands out by leveraging probabilistic models to guide the search process, offering better performance and resource efficiency compared to traditional search methods. Whether you choose Hyperopt, H2O AutoML, or another tool, these solutions highlight the power of Bayesian techniques in building high-performing machine learning models.

The tools discussed here represent some of the top options for Bayesian hyperparameter tuning, each with unique advantages. By optimizing hyperparameters effectively, you can achieve higher model accuracy, reduce computational overhead, and create robust AI-driven solutions.

If you're looking to expand your use of AI beyond hyperparameter tuning, consider exploring additional tools tailored to your business needs. Platforms like AI for Businesses provide a curated selection of AI solutions designed to help small and growing companies streamline operations and unlock new opportunities.

FAQs

Why is Bayesian hyperparameter tuning better than grid or random search?

Bayesian hyperparameter tuning offers a smarter alternative to traditional methods like grid or random search. Unlike grid search, which tests every possible combination, or random search, which samples parameters without direction, Bayesian optimization takes a more strategic approach. It uses past results to predict and target the most promising areas in the hyperparameter space. The result? Faster convergence and improved performance, especially when dealing with complex models.

This method is particularly useful for businesses tackling resource-intensive machine learning tasks. By reducing the number of iterations and computational demands, Bayesian tuning not only delivers optimal results but also saves significant time and costs. It’s a more efficient way to fine-tune models without draining resources.

What should small businesses consider when choosing a Bayesian optimization tool for hyperparameter tuning?

Small businesses should prioritize tools that are simple to set up, provide efficient performance, and enable a straightforward way to define goals. These qualities are especially important for teams working with tighter budgets or minimal technical support.

It's also smart to choose tools that can explore hyperparameters more efficiently, needing fewer attempts than traditional approaches like grid or random search. This not only saves time but also cuts down on computing expenses - perfect for businesses looking to improve operations without straining their resources.

What should you consider when using Bayesian optimization tools with your machine learning framework?

When incorporating Bayesian optimization tools into your machine learning framework, it's crucial to ensure the tool aligns well with your framework's architecture and can manage the hyperparameter search space you've set up. Smooth data exchange between the optimizer and your training pipeline is essential to keep things running efficiently.

You’ll also want to pay attention to how you allocate computational resources, as Bayesian optimization relies on an iterative process that can be resource-intensive. To maintain consistency and trust in your results, adopt practices like fixing random seeds and logging configurations. These steps go a long way in ensuring reproducibility and reliability throughout your workflow.