Employers are increasingly using AI to analyze employees' emotions through sentiment tracking, raising questions about privacy. These tools monitor digital communications, video cues, and even physical behaviors to gauge emotional states. While companies argue this helps improve productivity, engagement, and mental health support, it also sparks concerns about misuse, stress, and emotional privacy violations.

Key points:

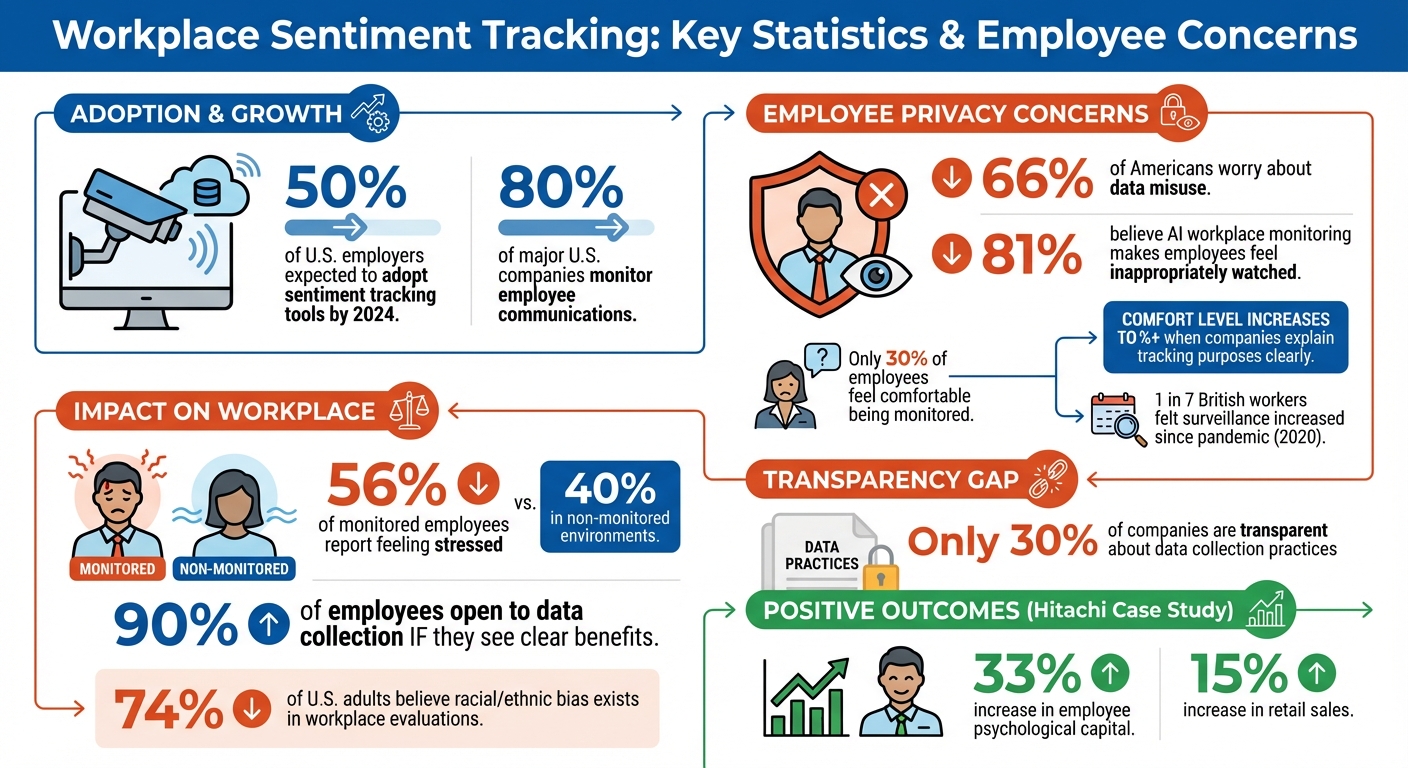

- Growth in Use: By 2024, half of U.S. employers were expected to adopt sentiment tracking tools.

- Privacy Concerns: 66% of Americans worry about data misuse, and only 30% of employees feel comfortable being monitored.

- Legal Challenges: State laws such as the California Consumer Privacy Act (CCPA) aim to protect employee data, but federal regulation remains limited.

- Ethical Issues: AI systems often misinterpret emotions, especially for neurodivergent individuals or those from diverse backgrounds.

Balancing insights with respect for privacy requires transparency, employee consent, and limiting data collection to relevant work activities.

Workplace Sentiment Tracking Statistics: Employee Privacy Concerns and Adoption Rates


How Sentiment Tracking Affects Workplace Dynamics

As companies adopt sentiment tracking, the line between gaining insight and respecting privacy grows thin. The technology does more than gather data; it reshapes how people interact at work. Modern sentiment trackers analyze everything from daily routines to speech patterns and conversational tone. For instance, tools like Isaak evaluate emails and file access to identify key contributors and map collaboration networks, while systems such as Humanyze use sociometric badges to monitor the length and tone of casual conversations.

These tools provide managers with valuable insights, paving the way for better employee engagement and more informed decision-making.

Increasing Employee Engagement

Real-time sentiment analysis helps spot potential issues early. By analyzing communication tone and volume, these tools can flag signs of burnout or meeting overload. When framed as constructive feedback, this data empowers employees to enhance their performance.
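To make the idea of combining tone and volume concrete, here is a minimal Python sketch of how a tool might raise a burnout flag. The word lists, the `after_hours` field, and both thresholds are illustrative assumptions, not values from any vendor; production tools typically rely on trained language models rather than a hand-written lexicon.

```python
from dataclasses import dataclass

# Hypothetical word lists for illustration; real tools use trained models.
NEGATIVE_WORDS = {"exhausted", "overwhelmed", "frustrated", "deadline", "late"}
POSITIVE_WORDS = {"thanks", "great", "happy", "glad", "done"}


@dataclass
class Message:
    text: str
    after_hours: bool  # hypothetical flag: sent outside the employee's working hours


def tone_score(text: str) -> int:
    """Crude lexicon-based tone: positive word count minus negative word count."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    positives = sum(w in POSITIVE_WORDS for w in words)
    negatives = sum(w in NEGATIVE_WORDS for w in words)
    return positives - negatives


def burnout_signal(messages: list[Message],
                   max_after_hours_share: float = 0.3,
                   min_avg_tone: float = 0.0) -> bool:
    """Return True when tone is negative on average AND after-hours volume is high.

    Both thresholds are assumptions, not validated values. The result is a
    prompt for a supportive conversation, not a judgment about the person.
    """
    if not messages:
        return False
    avg_tone = sum(tone_score(m.text) for m in messages) / len(messages)
    after_hours_share = sum(m.after_hours for m in messages) / len(messages)
    return avg_tone < min_avg_tone and after_hours_share > max_after_hours_share
```

Requiring both signals at once is a deliberate choice in this sketch: a single terse email or one late evening should not, on its own, trigger a flag.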

"When artificial intelligence and other advanced technologies are implemented for developmental purposes, people like that they can learn from it and improve their performance." – Emily Zitek, Associate Professor of Organizational Behavior, Cornell University

The way this data is handled makes all the difference. Between 2015 and 2020, Hitachi introduced a program using wearables and a mobile app to monitor employee happiness levels. Instead of using the data for surveillance, the company focused on offering suggestions to boost well-being. As a result, workers reported a 33% increase in psychological capital, and retail sales rose by 15%. Employees viewed the initiative as supportive rather than intrusive, which contributed to its success.

Building Better Team Collaboration

Sentiment tracking also enhances team dynamics. By monitoring communication patterns, AI systems can pinpoint informal leaders and identify bottlenecks. This helps managers understand not just what gets done but how teams function together.

However, constant monitoring can backfire. Studies show that 56% of employees under surveillance report feeling stressed, compared to 40% in non-monitored environments. Real-time AI feedback can also sometimes stifle creativity during collaborative tasks. When workers feel micromanaged, they often disengage, doing only the bare minimum instead of going the extra mile.

"When monitoring is used as an invasive way of micromanaging, it violates the unspoken agreement of mutual respect between a worker and their employer." – Tara Behrend, Professor of Human Resources and Labor Relations, Michigan State University

Ultimately, the line between helpful insights and intrusive surveillance comes down to transparency and intent. Tools designed for coaching and development are generally well-received, while those focused on automatic performance evaluation can feel oppressive. A Cornell study found that over 30% of participants criticized AI surveillance for being stressful and limiting creativity, compared to just 7% who felt similarly about human monitoring.

Privacy Concerns with Sentiment Tracking

The rapid rise of sentiment tracking technology has sparked serious concerns about employee privacy, particularly as its implementation often outpaces the creation of clear guidelines for handling sensitive emotional data.

Employee Consent and Transparency

One of the biggest challenges with sentiment tracking is ensuring genuine employee consent. The inherent power imbalance between employers and employees makes it hard for workers to freely opt out. Many feel pressured to agree, fearing potential job-related consequences. While some sentiment tools focus on performance coaching, those that delve into emotional data require extra care to ensure consent is authentic and informed.

Interestingly, while only 30% of employees feel comfortable with employers monitoring their communications, that number rises to over 50% when companies clearly explain what they’re tracking and why. Transparency, it seems, is key.

Clear documentation is a starting point. Employee handbooks should detail exactly what data is being collected, how it will be used, and who will have access to it. Equally critical is allowing employees to review their own data and dispute any inaccuracies. Steve Hatfield, Deloitte’s Global Future of Work Leader, emphasizes:

"Any type of employee data collection should be done with the consent of employees and in a transparent way."

Concerns about misuse are widespread: 81% of Americans believe AI-driven workplace monitoring will make employees feel inappropriately watched, and 66% worry that collected data will be misused. These fears are valid. Researchers warn that emotion AI encroaches on "emotional privacy", the right to control access to one’s internal feelings and emotional states.
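Letting employees review and dispute their own data can be modeled with two rules: an employee sees only their own records, and a disputed record stays out of reports until someone resolves it. The `SentimentStore` class and its fields below are hypothetical, a minimal in-memory Python sketch rather than the interface of any real product.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    record_id: int
    employee_id: str
    label: str                 # hypothetical inferred label, e.g. "frustrated"
    disputed: bool = False
    notes: list[str] = field(default_factory=list)


class SentimentStore:
    """Hypothetical store illustrating employee access and dispute rights."""

    def __init__(self, records: list[Record]) -> None:
        self._records = {r.record_id: r for r in records}

    def my_records(self, employee_id: str) -> list[Record]:
        """Return only the requesting employee's own records."""
        return [r for r in self._records.values() if r.employee_id == employee_id]

    def dispute(self, employee_id: str, record_id: int, reason: str) -> None:
        """Mark a record as contested; employees may dispute only their own data."""
        record = self._records.get(record_id)
        if record is None or record.employee_id != employee_id:
            raise PermissionError("record not found for this employee")
        record.disputed = True
        record.notes.append(reason)

    def reportable(self) -> list[Record]:
        """Records eligible for reporting: anything under dispute is held back."""
        return [r for r in self._records.values() if not r.disputed]
```

Holding disputed records back by default puts the burden of proof on the system rather than on the employee, which fits the emphasis on consent and transparency.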

These transparency issues naturally lead to broader discussions about data security and the dangers of misuse.

Data Security and Misuse Risks

Emotional data introduces serious security vulnerabilities. Breaches could expose sensitive psychological information, while internal misuse could involve manipulating behavior, justifying dismissals, or enforcing unrealistic emotional standards. This adds to the stress already associated with constant surveillance.

Emotion AI systems are far from perfect. They often rely on flawed algorithms that misinterpret facial expressions or speech patterns, leading to biased outcomes, particularly for neurodivergent individuals or those from diverse cultural backgrounds. When monitoring extends to personal devices, the risks grow even more troubling. Employers may inadvertently track employees’ moods or activities outside of work. A 2020 survey revealed that 1 in 7 British workers felt their workplace surveillance had increased since the pandemic began.

The psychological impact of such monitoring is real. Studies link perceptions of surveillance to greater psychological distress and lower job satisfaction, a phenomenon referred to as "stress proliferation". Additionally, employees often engage in "emotional labor", suppressing or faking emotions to meet AI-driven expectations, which can lead to burnout.

Finding the Right Balance Between Monitoring and Privacy

To mitigate these risks, companies need to strike a careful balance that respects both employee privacy and organizational goals. One approach is to anonymize and aggregate data before sharing it with leadership. Insights should focus on team trends rather than singling out individuals. Additionally, companies should establish clear retention policies, deleting raw sentiment data within 30 to 90 days while keeping only aggregated trends.
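The anonymize, aggregate, and delete pattern can be sketched in a few lines of Python. In this minimal example, the report contains only team names and average scores, teams with fewer than five distinct people are suppressed (an average over two or three people can effectively identify individuals), and raw records older than 90 days, the upper end of the range above, are dropped. The record layout, the score scale, and both constants are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from statistics import mean
from typing import Optional

MIN_GROUP_SIZE = 5               # assumption: suppress teams smaller than this
RETENTION = timedelta(days=90)   # assumption: upper end of the 30-90 day range


@dataclass
class SentimentRecord:
    employee_id: str
    team: str
    score: float          # hypothetical scale: -1.0 (negative) to 1.0 (positive)
    recorded_at: datetime  # must be timezone-aware


def team_report(records: list[SentimentRecord]) -> dict[str, float]:
    """Average score per team; no employee IDs leave this function."""
    by_team: dict[str, list[SentimentRecord]] = {}
    for r in records:
        by_team.setdefault(r.team, []).append(r)
    report: dict[str, float] = {}
    for team, rows in by_team.items():
        people = {r.employee_id for r in rows}
        if len(people) >= MIN_GROUP_SIZE:  # small groups are omitted entirely
            report[team] = round(mean(r.score for r in rows), 2)
    return report


def purge_expired(records: list[SentimentRecord],
                  now: Optional[datetime] = None) -> list[SentimentRecord]:
    """Keep only raw records still inside the retention window."""
    if now is None:
        now = datetime.now(timezone.utc)
    return [r for r in records if now - r.recorded_at <= RETENTION]
```

In practice, the purge would run on a schedule, and only the output of `team_report` would be kept beyond the retention window.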

Monitoring should be limited to company-owned devices and work-specific platforms like email or Slack. Under no circumstances should tracking software be installed on personal devices such as employees’ phones or laptops. Automated alerts for significant issues can reduce the need for constant manual monitoring, minimizing unnecessary exposure to raw employee data.
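The two safeguards in this paragraph, restricting collection to work platforms on company-owned devices and relying on automated alerts instead of people browsing raw data, might be combined as in the Python sketch below. The channel names, the `company_owned` flag, and the alert threshold are hypothetical assumptions; the alert exposes only team names.

```python
from dataclasses import dataclass

ALLOWED_CHANNELS = {"email", "slack"}  # assumption: approved work platforms only
ALERT_THRESHOLD = -0.3                 # assumption: team average that triggers review


@dataclass
class Event:
    team: str
    channel: str
    company_owned: bool  # hypothetical device-ownership flag
    score: float


def in_scope(event: Event) -> bool:
    """Only work platforms on company-owned devices are ever analyzed."""
    return event.company_owned and event.channel in ALLOWED_CHANNELS


def team_alerts(events: list[Event]) -> list[str]:
    """Teams whose in-scope average score falls below the alert threshold.

    Out-of-scope events are discarded before any scoring, and only team
    names are returned, so no one has to read individual messages.
    """
    scores: dict[str, list[float]] = {}
    for e in filter(in_scope, events):
        scores.setdefault(e.team, []).append(e.score)
    return sorted(team for team, values in scores.items()
                  if sum(values) / len(values) < ALERT_THRESHOLD)
```

Filtering before scoring, rather than after, means data from personal devices or unapproved channels is never processed at all.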

It’s also vital to use sentiment insights as a tool to inform decisions - not to dictate them. Managers should have the final say, ensuring a human element remains in the decision-making process. As Kat Roemmich, a researcher at the University of Michigan, explains:

"Emotion AI may function to enforce workers' compliance with emotional labor expectations, and that workers may engage in emotional labor as a mechanism to preserve privacy over their emotions."

Ultimately, the goal should be to create shared benefits. Data collection should serve employees by helping with personalized coaching or preventing burnout - not just boosting the company’s bottom line.
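To make the manager's final say explicit in software, every sentiment-derived suggestion can stay inert until a named person records a decision and a reason. The `Suggestion` type and `record_decision` function below are hypothetical, a Python sketch of this human-in-the-loop pattern rather than any product's API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    ACCEPTED = "accepted"
    DECLINED = "declined"


@dataclass
class Suggestion:
    team: str
    text: str  # e.g. "Consider reducing recurring meetings"
    status: Status = Status.PENDING_REVIEW
    reviewer: Optional[str] = None
    rationale: Optional[str] = None


def record_decision(s: Suggestion, reviewer: str, accept: bool,
                    rationale: str) -> Suggestion:
    """A person, not the model, decides; the reason is kept for accountability."""
    if not reviewer.strip() or not rationale.strip():
        raise ValueError("a named reviewer and a written rationale are required")
    if s.status is not Status.PENDING_REVIEW:
        raise ValueError("a decision has already been recorded")
    s.status = Status.ACCEPTED if accept else Status.DECLINED
    s.reviewer, s.rationale = reviewer, rationale
    return s


def actionable(suggestions: list[Suggestion]) -> list[Suggestion]:
    """Only suggestions a human has explicitly accepted may be acted on."""
    return [s for s in suggestions if s.status is Status.ACCEPTED]
```

Requiring a written rationale is a design choice in this sketch: it leaves a record that a human actually weighed the insight instead of rubber-stamping it.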


Legal and Ethical Considerations

Following Privacy Laws

Workplace sentiment tracking operates under a maze of legal frameworks, with oversight divided among federal agencies and varying state laws. Agencies like the Equal Employment Opportunity Commission (EEOC), the National Labor Relations Board (NLRB), and the Department of Labor's Occupational Safety and Health Administration (OSHA) handle cases involving digital surveillance and sentiment tracking. However, no single federal law directly regulates emotion monitoring in the workplace.

California leads the charge in granting workers data rights through the California Consumer Privacy Act (CCPA), which allows employees to access, and request the deletion of, personal information their employers collect, including emotional data. Sentiment tracking often involves biometric data, like voiceprints and facial geometry, triggering extra safeguards under laws like Illinois's Biometric Information Privacy Act (BIPA). Similar protections exist in Texas and Washington, where explicit consent is required before employers can collect such data.

The legal landscape shifted significantly in 2025, when hundreds of workplace technology bills were introduced. Colorado's AI Act, enacted in 2024, requires impact assessments and transparency measures for high-risk AI systems used in employment decisions. In California and Massachusetts, lawmakers proposed further accountability measures through the No Robo Bosses Act and the FAIR Act, respectively. New York, Connecticut, and Delaware already require employers to give advance notice before engaging in electronic monitoring. Meanwhile, laws in Texas, Maryland, and Nebraska have introduced a human-in-the-loop requirement, ensuring that critical employment decisions involving algorithms include human oversight to avoid fully automated outcomes like terminations or discipline.

These intricate legal frameworks underscore the ethical challenges of emotion monitoring in professional settings.

Ethical Challenges in Emotion Monitoring

Legal compliance is just one piece of the puzzle - emotion monitoring raises serious ethical concerns. The idea of "emotional privacy" - the right to keep one's inner feelings private - lacks legal definition in the U.S., but many experts argue it deserves the same level of protection as medical or financial data.

One major concern is the reliability of these systems. Research suggests that AI struggles to accurately interpret internal emotions based on external cues like facial expressions or vocal tone. This is especially concerning for neurodivergent individuals or those from diverse backgrounds, where emotional expressions might differ significantly. For context, 74% of U.S. adults believe racial and ethnic bias is an issue in workplace evaluations, and emotion AI risks amplifying those biases rather than addressing them.

The power imbalance between employers and employees further complicates matters. Workers often feel they can't refuse consent to such monitoring without jeopardizing their jobs. A review by the U.S. Government Accountability Office (GAO) of 217 public comments highlighted widespread concerns about employer surveillance extending into personal spaces, such as monitoring through personal devices at home. The GAO noted:

"Digital surveillance by employers may create a sense of distrust among workers, making them feel like they are constantly being watched, and leading to a decline in worker productivity and morale."

When organizations fail to mitigate these harms, some researchers advocate for "critical refusal" - opting not to implement emotion-monitoring technologies at all. Ethical guidelines suggest treating emotional data with extreme care, explicitly banning its use for punitive measures like terminations or disciplinary actions. Regular bias audits, transparent data logs, and meaningful opt-out options can help, but they don't fully resolve the tension between monitoring emotions and respecting individual dignity. Protecting emotional privacy is as essential as legal compliance when it comes to fostering trust and maintaining a respectful workplace.
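A ban on punitive use is easier to uphold when it is enforced in code as well as in policy. The minimal Python sketch below assumes hypothetical purpose labels: every request to use emotional data must name a purpose, punitive purposes such as termination or discipline are rejected outright, unknown purposes are denied by default, and employees who opted out are excluded. A check like this supports, but cannot replace, bias audits and human judgment.

```python
# Hypothetical purpose labels; an organization would define its own.
ALLOWED_PURPOSES = {"team_wellbeing_report", "voluntary_coaching"}
BANNED_PURPOSES = {"termination", "discipline"}


def authorize_use(purpose: str, employee_id: str, opted_out: set[str]) -> bool:
    """Gate every use of emotional data by purpose and by the employee's choice.

    Punitive purposes raise an error so the attempt is visible instead of
    failing silently; anything not explicitly allowed is denied.
    """
    if purpose in BANNED_PURPOSES:
        raise PermissionError(f"emotional data may not be used for {purpose!r}")
    if employee_id in opted_out:
        return False
    return purpose in ALLOWED_PURPOSES
```

Raising an error for banned purposes, rather than quietly returning `False`, makes attempted misuse show up in logs and audits.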

How AI Tools Help SMEs Implement Sentiment Tracking Responsibly

AI Tools for Sentiment Analysis

Small and medium-sized enterprises (SMEs) now have AI tools that put sentiment tracking within reach while respecting privacy. General-purpose AI platforms such as Writesonic and Stability.ai are among the options SMEs encounter, but before adopting any tool for this purpose, a business should confirm that it can integrate with existing communication systems and analyze emails, chats, and survey responses to gauge employee sentiment.

However, responsible implementation is key. Research shows that while 80% of major U.S. companies monitor employee communications, only 30% are transparent about what data they collect. This highlights a crucial gap - SMEs need to prioritize early and clear communication about their practices. When handled thoughtfully, sentiment analysis can uncover burnout risks and enhance workplace well-being without crossing into invasive territory.

These tools create opportunities to gain insights while maintaining a strong focus on privacy.

Key Features to Look for in AI Sentiment Tools

When selecting an AI tool for sentiment tracking, certain features are critical for balancing insight with employee privacy:

- Data Anonymization: Tools should automatically strip identifying details - like names, employee IDs, and department information - and replace them with anonymous tags. This allows businesses to assess overall team sentiment without singling out individuals.

- Advanced Security Measures: Look for features such as end-to-end encryption, automated data deletion, and detailed access controls. These ensure sensitive information is protected and only accessible to authorized personnel. Audit trails can further enhance accountability by documenting who accesses the data.

- Opt-in Consent and Aggregated Reporting: Privacy-focused tools often include opt-in consent mechanisms, which are crucial for building trust. Studies reveal that 90% of employees are open to data collection if there's a clear benefit. Additionally, tools that provide aggregated reports at the team or department level - rather than individual emotional profiles - help preserve personal privacy. When employers clearly explain the purpose of monitoring, employee comfort levels can jump from 30% to over 50%.
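The anonymization, opt-in, and audit-trail features listed above can be sketched with Python's standard library alone. This example keeps only records from employees who opted in, replaces employee IDs with keyed HMAC-SHA256 tags, drops names and department fields, and logs every dataset access. Strictly speaking, keyed hashing is pseudonymization rather than full anonymization: anyone holding the key can re-link tags to people, so the key (a hypothetical placeholder below) belongs in a separate, access-controlled secrets store. Free-text messages may also still contain names. Field names are assumptions.

```python
import hashlib
import hmac
from datetime import datetime, timezone

SECRET_KEY = b"replace-with-a-key-from-a-secrets-manager"  # hypothetical placeholder

AUDIT_LOG: list[dict] = []  # in a real system this would be append-only storage


def pseudonym(employee_id: str) -> str:
    """Stable anonymous tag for an employee; cannot be reversed without SECRET_KEY."""
    digest = hmac.new(SECRET_KEY, employee_id.encode(), hashlib.sha256).hexdigest()
    return "anon-" + digest[:12]


def prepare_for_analysis(rows: list[dict], consented: set[str]) -> list[dict]:
    """Keep opted-in employees only and drop every identifying field."""
    out = []
    for row in rows:
        if row["employee_id"] not in consented:
            continue  # opt-in: no consent, no processing
        # Only the tag and the text survive; name and department are discarded.
        out.append({"tag": pseudonym(row["employee_id"]), "text": row["text"]})
    return out


def read_dataset(user: str, dataset: str, data: list[dict]) -> list[dict]:
    """Record who accessed which dataset and when, then return the data."""
    AUDIT_LOG.append({"user": user, "dataset": dataset,
                      "at": datetime.now(timezone.utc).isoformat()})
    return data
```

Because the tag is stable, the same person's records can still be grouped over time for trend analysis without anyone seeing who they are.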

By focusing on these features, SMEs can use sentiment tracking tools to improve workplace dynamics without compromising employee trust.

How AI for Businesses Can Help

Finding the right sentiment tracking tool that balances privacy and functionality can be challenging. That’s where AI for Businesses comes in. This curated platform (https://aiforbusinesses.com) is designed to guide SMEs through the selection process, offering a comprehensive directory of AI tools. From platforms like Writesonic to Stability.ai, the site helps businesses identify solutions that align with their privacy and sentiment analysis goals.

Conclusion: Using Technology While Protecting Privacy

Sentiment tracking offers a way to gather valuable insights while respecting individual privacy. In fact, research highlights that 90% of employees are open to allowing their employers to collect data about them - if they see a clear and tangible benefit in return. The foundation of this acceptance lies in building trust through transparency, obtaining meaningful consent, and ensuring mutual value.

Hitachi is a case in point. By implementing sentiment tracking transparently, the company saw measurable improvements in performance. This wasn’t about surveillance; it was about creating a system where both the organization and its employees could thrive. Striking this balance paves the way for responsible, data-driven decision-making.

Key practices include focusing on aggregated data, adopting opt-in policies, and ensuring human oversight remains part of the process.

"Monitoring, when done well, can provide valuable information for training and feedback... But all of that depends on a culture of respect and trust." – Tara Behrend, President of the Society for Industrial and Organizational Psychology

For small and medium-sized enterprises, tailored AI tools can make ethical implementation more accessible. Directories such as AI for Businesses, which lists platforms like Writesonic and Stability.ai, can help SMEs compare options. The features to prioritize are data anonymization, encryption, and aggregated reporting, which help sentiment tracking support both employee well-being and business success.

The future of workplace sentiment tracking boils down to one key principle: "Trust is the outcome of high competence and the right intent". When organizations combine advanced technology with ethical oversight, they create an environment where operational performance and employee dignity can flourish side by side.

FAQs

How can companies respect employee privacy while using sentiment tracking tools?

Companies aiming to respect employee privacy need to prioritize transparency when using sentiment tracking tools. This means clearly communicating with employees about what data is being collected, how it will be used, and what safeguards are in place to protect their privacy. It's equally important to obtain explicit consent from employees before implementing such tools. Providing an option to opt out further reinforces trust and ensures alignment with workplace privacy standards.

What challenges might sentiment tracking create for neurodivergent employees?

Sentiment tracking tools are designed to analyze emotions and behaviors, but they often fail to consider the varied ways neurodivergent employees express themselves. This gap can result in inaccurate readings or unintended biases, which might misrepresent their emotional states or undervalue their contributions.

To tackle this issue, organizations need to prioritize inclusivity in the design of these tools and ensure they are complemented by human oversight. Pairing technology with a human touch can help avoid unfair outcomes. Additionally, being open about how data is collected and used can foster trust across the workforce.

How can businesses use sentiment tracking tools without compromising employee privacy?

Balancing sentiment tracking with employee privacy is a delicate task that demands careful planning and open communication. These tools can offer useful insights into team morale and overall performance, but if not handled properly, they can spark privacy concerns and damage trust among employees.

To approach this responsibly, businesses should prioritize transparency. Clearly explain how the data will be used and ensure it serves to benefit both the employees and the organization. It's also essential to avoid overstepping with excessive monitoring and to respect emotional boundaries. By doing so, companies can build trust and maintain a healthy workplace culture, gaining valuable insights without negatively impacting employee morale or well-being.