In a world where businesses share sensitive data daily, keeping that information secure is critical. AI is reshaping how companies protect data collaboration by offering tools that detect threats, safeguard privacy, and simplify compliance. Here are the key takeaways:

- AI improves data security by analyzing patterns, detecting unusual activity, and automating responses to potential breaches.

- Privacy-focused methods, like federated learning and encryption, help protect sensitive data during collaboration.

- AI tools for SMEs can provide enterprise-grade security without the need for large IT teams, making advanced protection accessible to smaller businesses.

AI's role in secure data sharing is growing, but it also introduces challenges like false positives and new attack methods. Balancing privacy, compliance, and security is essential for safe collaboration.


Research Findings on AI's Role in Data Security and Collaboration

Recent research highlights how AI is reshaping secure data sharing. It offers new possibilities for protection but also introduces unique challenges.

AI-Powered Threat Prevention and Detection

AI can detect and counter many threats faster than traditional rule-based methods. By processing large volumes of real-time data, machine learning models can spot subtle irregularities that static rules might miss.

For example, AI systems can flag unusual user or network activity by comparing it to established behavioral baselines. Behavioral analytics are particularly useful in identifying insider threats or compromised accounts, as they learn typical user patterns and alert security teams when something seems off.
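To make the baseline idea concrete, here is a minimal Python sketch that compares one day's activity against a user's own history using a z-score. The signal (megabytes uploaded per day), the function name `is_anomalous`, and the cutoff of three standard deviations are illustrative assumptions; commercial behavioral analytics combine many signals and learned models.

```python
from statistics import mean, stdev

def is_anomalous(history: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag `observed` if it is more than `threshold` standard deviations
    away from this user's historical mean (a simple z-score baseline)."""
    if len(history) < 2:
        return False  # too little history to form a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return observed != mu  # perfectly regular history: any change stands out
    return abs(observed - mu) / sigma > threshold

# Megabytes uploaded per day by one user over the past week.
uploads_mb = [120, 135, 110, 128, 140, 125, 118]
print(is_anomalous(uploads_mb, 950))  # True
print(is_anomalous(uploads_mb, 130))  # False
```

A z-score is the simplest possible baseline; its main virtue here is that the flagging rule is easy to explain to the security team reviewing the alert.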

In response to potential threats, AI systems can act immediately: isolating user accounts, quarantining suspicious files, or temporarily restricting data-sharing permissions pending investigation. This rapid response shortens the window attackers have to exploit vulnerabilities and helps contain damage.
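The escalation logic behind these automated responses can be sketched as a mapping from a risk score to increasingly strict containment steps. The `Account` class, the `respond` function, and the 0.5/0.7/0.9 thresholds are hypothetical; in practice the score would come from a detection model, and every automated action should be routed to a human for review.

```python
from dataclasses import dataclass, field

@dataclass
class Account:
    """Hypothetical account record that containment actions modify."""
    user_id: str
    sharing_enabled: bool = True
    active: bool = True
    quarantined_files: list[str] = field(default_factory=list)

def respond(account: Account, risk_score: float, suspicious_files: list[str]) -> list[str]:
    """Apply escalating containment steps for a risk score between 0 and 1.
    The thresholds are illustrative, not recommendations."""
    actions = []
    if risk_score >= 0.5:
        account.sharing_enabled = False  # pause data sharing pending review
        actions.append("restrict_sharing")
    if risk_score >= 0.7:
        account.quarantined_files.extend(suspicious_files)
        actions.append("quarantine_files")
    if risk_score >= 0.9:
        account.active = False  # isolate the account entirely
        actions.append("isolate_account")
    if actions:
        actions.append("notify_security_team")  # a human reviews every automated step
    return actions

account = Account("u-123")
print(respond(account, 0.95, ["export.zip"]))
# ['restrict_sharing', 'quarantine_files', 'isolate_account', 'notify_security_team']
```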

Natural language processing (NLP) also helps safeguard sensitive information. By scanning documents, emails, and other communications, NLP tools can identify confidential data so that appropriate protections, such as encryption or access restrictions, can be applied.
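Production NLP classifiers are trained models, but a rule-based detector shows the basic input and output of this step. The sketch below is a simplified stand-in, not an NLP model: the `PATTERNS` table and `find_sensitive` function are hypothetical, and real tools add context and validation (for example, Luhn checks on card numbers) to reduce false positives.

```python
import re

# Hypothetical patterns for a few common U.S. identifiers.
PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13-16 digits
}

def find_sensitive(text: str) -> dict[str, list[str]]:
    """Return each pattern that matched, with the matching substrings."""
    hits = {name: pattern.findall(text) for name, pattern in PATTERNS.items()}
    return {name: found for name, found in hits.items() if found}

print(find_sensitive("Contact jane.doe@example.com, SSN 123-45-6789, card 4111 1111 1111 1111."))
# {'us_ssn': ['123-45-6789'], 'email': ['jane.doe@example.com'], 'card_number': ['4111 1111 1111 1111']}
```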

Benefits and Limitations Comparison

Research has identified both the strengths and weaknesses of AI-driven security systems when it comes to secure collaboration:

| Benefits | Limitations |
|---|---|
| Enhanced Threat Detection: AI can outperform traditional rule-based methods in identifying threats. | False Positives: Excessive alerts from AI models can overwhelm security teams. |
| Rapid Response: Automation enables quick threat mitigation, reducing potential damage. | Data Dependence: High-quality, extensive datasets are essential for AI effectiveness. |
| Scalability: AI can handle growing data volumes without a proportional increase in staff. | Adversarial Vulnerabilities: Attackers may manipulate AI models using crafted inputs. |
| Cost Efficiency: Automating security tasks can lower operational costs. | Implementation Complexity: Deploying AI requires specialized expertise and ongoing maintenance. |
| Consistent Protection: Security policies can be applied uniformly across data-sharing activities. | Privacy Concerns: Continuous monitoring may raise employee privacy issues. |
| Adaptive Learning: AI models can improve their detection as they are retrained on new threat data. | Regulatory Uncertainty: Changing governance standards for AI security can complicate compliance. |

While AI significantly improves threat detection, it also introduces risks. Attackers are now using AI techniques of their own to bypass defenses, adding a new layer of complexity. This makes human oversight an essential part of any AI-driven security strategy: pairing AI's speed and pattern recognition with human judgment helps teams handle complex security scenarios.

AI-powered security tools are especially advantageous for small and medium-sized businesses, as they provide strong protection without requiring large security teams. However, these organizations must carefully evaluate their needs and ensure they have the necessary resources for proper implementation and upkeep.

AI Technologies for Secure Data Collaboration

A growing set of AI-driven and privacy-preserving technologies now underpins secure data collaboration.

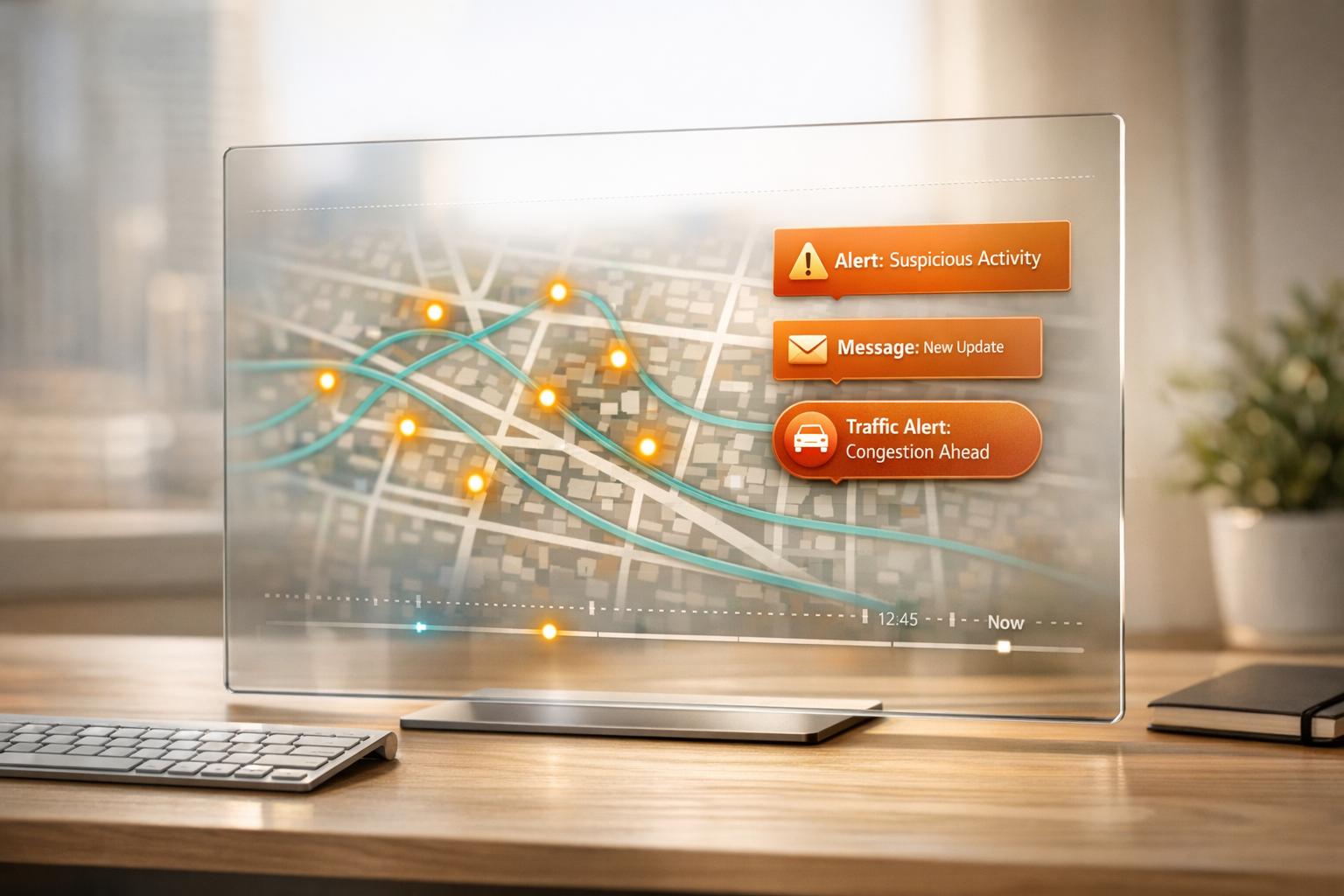

Automated Threat Detection and Real-Time Monitoring

AI-powered systems continuously scan for potential threats and vulnerabilities. Machine learning models build baselines of normal behavior so they can identify unusual activity that might signal a breach, while deep learning models analyze network traffic for signs of unauthorized access or data theft.

Anomaly detection tools, for example, monitor login habits, file transfers, and access patterns to build detailed user behavior profiles. When activity deviates from a profile, such as a login at an unusual hour or an unexpected file transfer, it triggers predefined security measures. Predictive analytics goes a step further by anticipating risks before they materialize, and automated systems can then isolate compromised accounts, adjust permissions, or quarantine threats to contain the damage. Beyond spotting threats, a second family of techniques protects data integrity and privacy during collaboration.
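The unusual-login-time check can be sketched as a per-user histogram of login hours. The function names and the 2% cutoff below are illustrative assumptions, and a real system would also account for time zones, travel, and shift changes.

```python
from collections import Counter

def build_login_profile(login_hours: list[int]) -> Counter:
    """Count how many past logins fell in each hour of the day (0-23)."""
    return Counter(login_hours)

def is_unusual_login(profile: Counter, hour: int, min_share: float = 0.02) -> bool:
    """Flag a login whose hour accounts for less than `min_share` of past logins."""
    total = sum(profile.values())
    if total == 0:
        return True  # no history yet: treat as unusual and require extra checks
    return profile[hour] / total < min_share

# Example: a user who normally logs in during business hours.
profile = build_login_profile([9] * 40 + [10] * 30 + [14] * 25 + [17] * 5)
print(is_unusual_login(profile, 3))   # True: no previous logins at 3 a.m.
print(is_unusual_login(profile, 17))  # False: 5% of past logins
```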

Privacy-Preserving Computation Methods

Several privacy-preserving computation methods let organizations collaborate on sensitive data without exposing it:

- Federated learning lets organizations train models together without sharing raw data; only model updates, such as parameters or gradients, are exchanged.

- Homomorphic encryption enables computations on encrypted data, so sensitive information stays encrypted even during processing.

- Secure multi-party computation lets multiple parties compute a shared function without revealing their individual inputs, which makes it well suited to generating aggregated insights.

- Differential privacy protects individual records by adding carefully calibrated noise, keeping aggregate results approximately accurate while limiting what can be learned about any one person.

- Zero-knowledge proofs make it possible to verify a claim, such as compliance or data quality, without exposing the underlying data.

Used together, these methods help ensure that collaboration doesn’t come at the cost of privacy.
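Secure multi-party computation is the easiest of these to illustrate in a few lines. The sketch below uses additive secret sharing, one common building block, to let three parties learn the sum of their private values without revealing any single value. It simulates all parties in one process and assumes honest-but-curious participants and non-negative totals smaller than the modulus; the function names and modulus are illustrative choices, and real deployments use audited protocols and libraries.

```python
import secrets

MODULUS = 2**61 - 1  # a prime; all arithmetic on shares is done modulo this value

def share(value: int, n_parties: int) -> list[int]:
    """Split `value` into n random shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_values: list[int]) -> int:
    """Simulate n parties computing the total of their private values."""
    n = len(private_values)
    # Party i splits its value and sends share j to party j.
    all_shares = [share(v, n) for v in private_values]
    # Party j adds up the shares it received; on their own these look random.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Combining the published partial sums reveals only the total.
    return sum(partial_sums) % MODULUS

# Three companies learn their combined incident count without revealing their own.
print(secure_sum([1200, 3400, 560]))  # 5160
```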

AI Tools for SMEs

Small and medium-sized enterprises (SMEs) can now access specialized AI tools to enhance data security while streamlining their operations. For instance, document security platforms automatically identify and protect sensitive files based on their content. AI-driven collaboration monitoring tools track how information flows across communication channels, ensuring confidential data stays protected. Access management systems take it further by continuously evaluating user permissions and suggesting updates based on changing roles or policies, helping SMEs maintain tight control over data access.
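A rule-based version of this access review can be sketched by comparing a user's granted permissions against a baseline for their current role. The role table and permission names are hypothetical; AI-driven products typically learn these baselines from peer-group usage rather than a hand-written table.

```python
# Hypothetical role baselines: the permissions each role is expected to need.
ROLE_PERMISSIONS = {
    "sales": {"crm:read", "crm:write", "reports:read"},
    "finance": {"ledger:read", "ledger:write", "reports:read"},
}

def review_access(role: str, granted: set[str]) -> dict[str, set[str]]:
    """Suggest changes that bring a user's permissions in line with their role."""
    baseline = ROLE_PERMISSIONS.get(role, set())
    return {
        "revoke": granted - baseline,   # permissions beyond what the role needs
        "missing": baseline - granted,  # expected permissions not yet granted
    }

# A user who moved from finance to sales but kept ledger write access:
print(review_access("sales", {"crm:read", "crm:write", "reports:read", "ledger:write"}))
# {'revoke': {'ledger:write'}, 'missing': set()}
```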

For businesses ready to adopt AI tools, platforms like AI for Businesses curate options for SMEs and growing companies. Examples include Looka (AI logo and brand design), Rezi (AI resume writing), Stability.ai (generative image models), and Writesonic (AI content writing). These are general-purpose business tools rather than dedicated security products, so review how each one stores and shares your data before using it in collaborative work. Many such tools offer APIs or integrations that fit into existing workflows, and SMEs can start small and expand their security measures as their needs evolve.


Best Practices for AI-Enabled Secure Data Collaboration

Implementing AI-driven security tools effectively is all about finding the right balance between strong protection and operational efficiency. For small and medium-sized enterprises (SMEs), having a clear plan in place is essential to get the most out of these tools while safeguarding sensitive data.

Risk Assessments and Data Classification

Before rolling out AI security tools, it’s crucial to classify your data based on sensitivity and map out how it moves through your organization. Start by categorizing data into groups like public, internal, confidential, and restricted. This helps define the level of protection and monitoring each type of data needs.
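One way to encode the four-tier scheme is an ordered enumeration, so that policies can compare levels directly. The handling rules in `CONTROLS` and the `allowed_to_share` helper are hypothetical examples, not a standard.

```python
from enum import IntEnum

class Sensitivity(IntEnum):
    """Ordered classification levels, lowest to highest sensitivity."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3

# Hypothetical handling rules per level; adapt these to your own policy.
CONTROLS = {
    Sensitivity.PUBLIC: {"encrypt_at_rest": False, "monitoring": "none"},
    Sensitivity.INTERNAL: {"encrypt_at_rest": True, "monitoring": "basic_access_logs"},
    Sensitivity.CONFIDENTIAL: {"encrypt_at_rest": True, "monitoring": "anomaly_detection"},
    Sensitivity.RESTRICTED: {"encrypt_at_rest": True, "monitoring": "real_time"},
}

def allowed_to_share(level: Sensitivity, partner_clearance: Sensitivity) -> bool:
    """A partner may receive data up to the level it has been cleared for."""
    return level <= partner_clearance

# A partner under NDA cleared for CONFIDENTIAL data:
print(allowed_to_share(Sensitivity.INTERNAL, Sensitivity.CONFIDENTIAL))    # True
print(allowed_to_share(Sensitivity.RESTRICTED, Sensitivity.CONFIDENTIAL))  # False
```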

Regular risk assessments are key. Map out your data flows - from collection to storage, processing, and eventual deletion. This process highlights critical points where AI monitoring should focus. For example, customer payment data might require real-time AI-powered fraud detection, while internal communications may only need basic access controls.

Assigning numerical risk scores to different data types can also help. High-risk assets, like financial or health-related data, should be prioritized for advanced AI protection. This ensures your budget is spent where it matters most.
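The article doesn't prescribe a scoring formula, so the sketch below assumes a common convention: likelihood times impact, each rated from 1 to 5. The asset names and ratings are made up for illustration.

```python
from dataclasses import dataclass

@dataclass
class DataAsset:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain) chance of compromise
    impact: int      # 1 (negligible) to 5 (severe) harm if compromised

    @property
    def risk_score(self) -> int:
        return self.likelihood * self.impact

def prioritize(assets: list[DataAsset]) -> list[tuple[str, int]]:
    """Rank assets from highest to lowest risk score."""
    ranked = sorted(assets, key=lambda a: a.risk_score, reverse=True)
    return [(a.name, a.risk_score) for a in ranked]

assets = [
    DataAsset("marketing_site_content", likelihood=2, impact=1),
    DataAsset("customer_payment_records", likelihood=4, impact=5),
    DataAsset("employee_health_records", likelihood=3, impact=4),
]
print(prioritize(assets))
# [('customer_payment_records', 20), ('employee_health_records', 12), ('marketing_site_content', 2)]
```

Assets at the top of this list are the natural candidates for real-time AI monitoring; those at the bottom may need only basic access controls.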

Don’t forget to test your defenses regularly. Conduct quarterly penetration tests to see if your AI systems can detect sophisticated attacks and whether your privacy-preserving methods are holding up during data collaboration.

Once risks are identified and data is classified, make privacy a fundamental part of every process.

Privacy-by-Design Principles

Building privacy into your systems from the start can strengthen security without introducing new vulnerabilities.

Choose AI tools that only process the data they absolutely need. For instance, you could monitor login patterns without storing personal details. This minimizes the risk of exposing sensitive information while still providing strong protection.
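Login monitoring can be set up so that only a keyed pseudonym and coarse timing features are stored. This sketch uses HMAC-SHA256 from Python's standard library; the key constant and function names are hypothetical, and in practice the key would live in a secrets manager so that pseudonyms can't be reversed by guessing user IDs.

```python
import hashlib
import hmac
from datetime import datetime

# Hypothetical secret; load it from a key manager rather than hard-coding it.
PSEUDONYM_KEY = b"replace-with-a-secret-from-your-key-manager"

def pseudonymize(user_id: str) -> str:
    """Map a user ID to a stable pseudonym without storing the ID itself."""
    return hmac.new(PSEUDONYM_KEY, user_id.encode(), hashlib.sha256).hexdigest()[:16]

def login_event(user_id: str, ts: datetime) -> dict:
    """Keep only what the anomaly model needs: a pseudonym, hour, and weekday."""
    return {"user": pseudonymize(user_id), "hour": ts.hour, "weekday": ts.weekday()}

event = login_event("alice@example.com", datetime(2024, 3, 4, 9, 15))
print(sorted(event))  # ['hour', 'user', 'weekday']
```

Because the same user always maps to the same pseudonym, the behavioral model can still learn per-user patterns without ever seeing the email address.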

Transparency is another critical element. Clearly document how your AI tools collect, process, and store data. Outline policies that explain when alerts are triggered, how false positives are handled, and what information is retained. This kind of documentation is invaluable during audits and regulatory reviews, and it builds trust with both employees and customers.

Allow users to maintain control over their data. For example, if an AI system flags unusual activity, provide a way for users to appeal decisions or explain legitimate business activities that might seem suspicious.

Lastly, combine AI monitoring with end-to-end encryption. Many AI monitoring tools can work from metadata and behavioral patterns rather than decrypted content, so analysis doesn’t require exposing message contents. Keep in mind that metadata can itself be sensitive and should be protected accordingly.

Regulatory Compliance

AI security tools must be configured to meet U.S. data protection regulations, helping you avoid legal issues while maintaining robust security practices.

For example, the California Consumer Privacy Act (CCPA) gives consumers the right to know what personal information a business collects, to request its deletion, and to opt out of its sale or sharing. Meeting these obligations at scale requires tracking how personal data is used and responding to consumer requests reliably. Tools that automatically catalog personal data collection, processing, and sharing can help, and they should be able to carry out verified deletion requests with minimal manual intervention.
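The catalog-and-delete workflow can be sketched as an inventory that records where each consumer's data is stored. The `PersonalDataInventory` class and the `delete_fn` callback are hypothetical; the sketch assumes the consumer's identity has already been verified, as CCPA requires before acting on a deletion request, and that each downstream system exposes some way to delete a record.

```python
from collections import defaultdict
from typing import Callable

class PersonalDataInventory:
    """Hypothetical catalog of where each consumer's personal data is stored."""

    def __init__(self) -> None:
        self._locations: dict[str, set[tuple[str, str]]] = defaultdict(set)

    def record_collection(self, consumer_id: str, system: str, record_id: str) -> None:
        self._locations[consumer_id].add((system, record_id))

    def report(self, consumer_id: str) -> list[tuple[str, str]]:
        """List known locations, e.g. to answer a right-to-know request."""
        return sorted(self._locations.get(consumer_id, set()))

    def handle_deletion(self, consumer_id: str,
                        delete_fn: Callable[[str, str], None]) -> list[tuple[str, str]]:
        """Delete every known record for an already-verified request.
        delete_fn(system, record_id) performs the deletion in the source system;
        if it raises, the remaining locations stay in the inventory for a retry."""
        locations = self.report(consumer_id)
        for system, record_id in locations:
            delete_fn(system, record_id)
            self._locations[consumer_id].discard((system, record_id))
        self._locations.pop(consumer_id, None)
        return locations

inventory = PersonalDataInventory()
inventory.record_collection("c-1", "crm", "42")
inventory.record_collection("c-1", "email_platform", "7")
inventory.handle_deletion("c-1", lambda system, rid: print("deleting", system, rid))
# deleting crm 42
# deleting email_platform 7
print(inventory.report("c-1"))  # []
```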

Different industries have their own regulations to consider. Healthcare organizations subject to HIPAA need AI systems that protect patient information in line with its requirements. Financial institutions must meet Gramm-Leach-Bliley Act requirements for customer data, and retailers handling payment card data must comply with PCI DSS, which extends to any AI tool that stores, processes, or transmits cardholder data.

To meet these standards, ensure your AI systems generate audit trails that log data access, modifications, and sharing activities. The logs should provide enough detail for regulatory investigations without retaining unnecessary data that could pose additional risks.
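One way to make audit trails tamper-evident is hash chaining: each entry stores the hash of the previous one, so altering an earlier entry breaks verification. The sketch below is a minimal illustration, not a compliance-grade logger; the field names are assumptions, and it logs identifiers rather than data contents, in line with keeping only what investigators need. Chaining alone does not stop someone who can rewrite the entire log, which is why real systems also store the latest hash somewhere separate.

```python
import hashlib
import json
from datetime import datetime, timezone

class AuditLog:
    """Append-only log where each entry includes the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, actor: str, action: str, resource: str) -> dict:
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "actor": actor,        # pseudonymous ID, not a full profile
            "action": action,      # e.g. "read", "modify", "share"
            "resource": resource,  # identifier only; never the data itself
            "prev": self.entries[-1]["hash"] if self.entries else "0" * 64,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that the chain is unbroken."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

log = AuditLog()
log.append("user-7f3a", "read", "contract-112")
log.append("user-91bc", "share", "contract-112")
print(log.verify())  # True
log.entries[0]["action"] = "delete"  # simulate tampering with an old entry
print(log.verify())  # False
```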

Keep your compliance efforts current by choosing vendors that track regulatory changes and notify administrators when configurations need updating, and by reviewing those settings whenever the rules change.

Finally, maintain thorough documentation of your AI systems, including configurations, decision-making logic, and privacy measures. Regulators are paying closer attention to how businesses use AI for data protection, so clear records can help demonstrate compliance and reduce the risk of penalties during investigations.

Challenges and Future Directions

AI is revolutionizing secure data collaboration, but it also brings new challenges. Businesses must stay ahead of emerging threats, adapt to shifting regulations, and manage the growing complexity of AI systems.

New Threats from Generative AI

Generative AI is opening the door to advanced cyberattacks that are harder to detect with traditional methods. Take deepfake technology, for example: it allows attackers to create highly convincing fake audio and video content for social engineering. These synthetic media attacks can defeat some voice authentication systems and trick employees into sharing sensitive information.

AI-powered phishing is another growing concern. Attackers now use advanced language models to craft personalized, convincing emails that mimic real writing styles and even reference specific company details. Unlike generic phishing attempts, these targeted attacks can slip past conventional defenses.

Then there’s adversarial machine learning, in which attackers deliberately manipulate AI security systems, either by poisoning the data these systems learn from or by crafting inputs designed to be misclassified. For example, an attacker who understands how a detection model works might shape malicious traffic so that it closely resembles normal activity.

To combat these risks, businesses need a multi-layered defense strategy. This includes combining AI detection tools with human oversight, retraining models to reflect the latest threats, and using behavioral analysis to identify unusual activity. Zero-trust architectures - which require ongoing verification for every access request - are also critical for minimizing the risks posed by AI-generated attacks. Navigating these evolving threats requires balancing data utility, user privacy, and compliance with regulations.

Balancing Data Utility, Privacy, and Compliance

Extracting insights from data while respecting privacy and regulatory requirements is a constant struggle. AI systems often need large datasets to function effectively, but privacy principles typically call for minimizing data collection to what’s strictly necessary.

Regulations add another layer of complexity. Businesses are expected to explain AI decisions, even when using black-box models that lack transparency. Add to this the challenges of navigating cross-border data transfers under varying international laws, and it’s clear why compliance can be so daunting.

To address these issues, businesses are turning to federated learning. This approach lets AI models learn from decentralized data without centralizing sensitive information, so collaboration can happen while raw data stays within local boundaries. Because shared model updates can still leak some information, federated learning is often combined with secure aggregation or differential privacy.
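Federated averaging, the best-known federated learning algorithm, can be shown with a toy one-parameter model. Each party runs a few gradient-descent steps on its own data, and a coordinating server averages only the resulting weights, weighted by how much data each party holds. The model (y ≈ w·x), learning rate, and round counts are illustrative assumptions.

```python
def local_update(w: float, data: list[tuple[float, float]],
                 lr: float = 0.01, epochs: int = 20) -> float:
    """Gradient descent on one party's private data for the model y ≈ w * x."""
    for _ in range(epochs):
        # Gradient of mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_averaging(parties: list[list[tuple[float, float]]], rounds: int = 10) -> float:
    w_global = 0.0
    total = sum(len(d) for d in parties)
    for _ in range(rounds):
        # Each party trains locally; only its updated weight leaves the site.
        local_weights = [local_update(w_global, d) for d in parties]
        # The server averages the weights, weighted by each party's data size.
        w_global = sum(w * len(d) for w, d in zip(local_weights, parties)) / total
    return w_global

# Two parties whose private data both follow y = 3x.
party_a = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
party_b = [(4.0, 12.0), (5.0, 15.0)]
print(round(federated_averaging([party_a, party_b]), 4))  # 3.0
```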

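Another promising solution is differential privacy. By adding controlled noise to data or query results, this technique lets AI systems identify trends without revealing information about specific individuals. The noise reduces accuracy, by an amount that depends on how strong a privacy guarantee you choose, but in exchange it offers a formal mathematical bound, set by a privacy parameter usually written ε (epsilon), on how much any one person’s data can influence the output.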
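The Laplace mechanism is the textbook way to add this controlled noise to a count. A count changes by at most 1 when one person's record is added or removed, so noise drawn from a Laplace distribution with scale 1/ε gives ε-differential privacy. The function names are illustrative, and Python's `random` module is not suitable for production use; vetted differential privacy libraries handle the floating-point and randomness pitfalls this sketch ignores.

```python
import random
from typing import Optional

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records: list[bool], epsilon: float,
                  rng: Optional[random.Random] = None) -> float:
    """Release how many records are True with epsilon-differential privacy.
    Adding or removing one person changes the count by at most 1
    (sensitivity 1), so the Laplace noise scale is 1 / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    return sum(records) + laplace_noise(1 / epsilon, rng)

# 1,000 customers, 500 of whom share an attribute we want to count privately.
records = [True] * 500 + [False] * 500
print(private_count(records, epsilon=1.0))  # close to 500; varies per call
print(private_count(records, epsilon=0.1))  # noisier: stronger privacy, less accuracy
```

Choosing ε is the policy decision here: smaller values add more noise and give stronger privacy, larger values give more accurate counts.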

Industry Efforts for Standardization

The lack of standardized frameworks for AI security has led to inconsistent practices across industries. However, several initiatives aim to create unified guidelines to help businesses secure their AI systems.

For instance, the NIST AI Risk Management Framework, a voluntary framework published in 2023, offers a structured approach to identifying and managing AI-related risks throughout a system's lifecycle. It is organized around four core functions (Govern, Map, Measure, and Manage) and emphasizes continuous monitoring and regular assessments to keep AI systems secure and effective.

Other industry efforts include sharing threat intelligence through consortiums, drawing on MITRE ATLAS (a knowledge base of adversary tactics and techniques targeting AI systems) to plan vulnerability testing, and developing certifications to evaluate model robustness, data security, and explainability. These initiatives aim to simplify compliance across jurisdictions while accounting for regional differences.

Conclusion

Recent research and advancements in AI are paving the way for safer and more efficient data collaboration. AI is proving to be a powerful ally in protecting sensitive information through tools like threat detection systems, privacy-preserving computation, and automated monitoring. These technologies are transforming the way organizations share data, making it both safer and more seamless.

At the same time, generative AI introduces new challenges, such as novel attack methods and the ongoing struggle to balance privacy with data utility. Despite these hurdles, businesses that adopt AI security tools thoughtfully can reap significant benefits. These tools offer practical solutions that allow companies to collaborate securely while mitigating risks.

Takeaways for SMEs

Small and medium-sized enterprises (SMEs) are uniquely positioned to adopt AI for secure data collaboration, often without the complications of legacy systems that larger organizations face. The key is to focus on the basics and build gradually.

- Choose AI tools that integrate effortlessly with your existing cloud systems. Look for platforms that enhance security without overburdening your IT team. Many of these tools offer enterprise-level protection at a fraction of the cost, often including compliance features to help meet regulatory standards.

- Adopt privacy-by-design principles in all data collaboration projects. This means planning for data protection from the outset. Techniques like data minimization - sharing only what’s absolutely necessary - and clear data retention policies can go a long way in safeguarding sensitive information.

- Train your team to recognize AI-driven threats like deepfakes and advanced phishing attempts. AI-generated content can be highly convincing, so employees should verify unusual requests through multiple channels to avoid falling victim to scams.

For businesses looking to explore AI tools, platforms such as AI for Businesses provide curated solutions that streamline operations while maintaining robust security standards.

By taking these steps, SMEs can set the foundation for secure and agile data collaboration, ensuring they are well-prepared for the challenges ahead.

The Future of AI in Data Collaboration

Looking ahead, AI's role in data collaboration will continue to evolve, focusing on smarter access controls and more transparent decision-making processes. Zero-trust architectures powered by AI are expected to become more sophisticated, offering context-aware access controls that go beyond simple yes-or-no permissions. These systems will continuously evaluate risk based on factors like user behavior, data sensitivity, and the surrounding environment.
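A context-aware decision of this kind can be sketched as a weighted risk score over those factors, with graded outcomes instead of a yes-or-no answer. The weights, thresholds, and field names are hypothetical. Returning each factor's contribution alongside the decision also gives a simple, human-readable reason for every outcome.

```python
from dataclasses import dataclass

@dataclass
class AccessRequest:
    behavior_anomaly: float  # 0-1 score from a behavioral model
    data_sensitivity: int    # 0 = public ... 3 = restricted
    managed_device: bool
    known_network: bool

# Hypothetical weights; a real system would calibrate these.
WEIGHTS = {"behavior": 0.5, "sensitivity": 0.1, "unmanaged_device": 0.2, "unknown_network": 0.15}

def evaluate(req: AccessRequest) -> tuple[str, float, dict[str, float]]:
    """Score one request and return (decision, risk, per-factor contributions)."""
    contributions = {
        "behavior": WEIGHTS["behavior"] * req.behavior_anomaly,
        "sensitivity": WEIGHTS["sensitivity"] * req.data_sensitivity,
        "unmanaged_device": 0.0 if req.managed_device else WEIGHTS["unmanaged_device"],
        "unknown_network": 0.0 if req.known_network else WEIGHTS["unknown_network"],
    }
    risk = sum(contributions.values())
    if risk < 0.3:
        decision = "allow"
    elif risk < 0.6:
        decision = "step_up_auth"  # e.g. require MFA before granting access
    else:
        decision = "deny"
    return decision, round(risk, 3), contributions

decision, risk, reasons = evaluate(AccessRequest(0.2, 2, managed_device=True, known_network=False))
print(decision, risk)  # step_up_auth 0.45
```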

Efforts to standardize AI security practices, such as the NIST AI Risk Management Framework, will also mature, providing businesses with clearer guidelines and reducing the complexity of implementing security measures across different regions.

One of the most promising developments is explainable AI in security applications. These tools will offer greater transparency by explaining the reasoning behind security decisions, helping businesses make more informed choices about risk management.

For SMEs, the future holds exciting possibilities. Advanced security features will become increasingly accessible, eliminating the need for deep technical expertise. The focus won’t just be on creating more powerful tools - it will be about ensuring every business, regardless of size, can safely and confidently collaborate in an increasingly data-driven world.

FAQs

How can small businesses adopt AI security tools without needing a large IT team?

Small businesses can effectively integrate AI security tools by opting for simple, automated solutions that are easy to set up and maintain. Many of these tools come equipped with features like continuous monitoring and threat detection, helping to minimize the need for extensive IT resources.

To get started, focus on pinpointing your specific security requirements and educating your team on basic AI-driven security protocols. Keeping systems updated and patched is another key step to safeguard against potential vulnerabilities. Working with vendors who offer straightforward, quick-to-deploy solutions can make the process even smoother, enabling small businesses to strengthen their security without relying on a large IT staff.

What are the risks of using AI in data security, and how can businesses address them?

AI brings with it some serious data security challenges, including data breaches, unauthorized access, algorithmic bias, adversarial attacks, and regulatory non-compliance. These issues can lead to financial setbacks, tarnished reputations, and even legal troubles.

To address these concerns, businesses need to prioritize strong security practices. This means implementing AI-specific governance policies, conducting thorough risk assessments, and using frameworks like the NIST AI Risk Management Framework. Additional steps - such as tracking the origin of data, securing supply chains, and developing an AI Bill of Materials - can further reinforce safe and responsible AI usage.

How do technologies like federated learning and homomorphic encryption improve secure data sharing?

Technologies like federated learning and homomorphic encryption are game-changers when it comes to secure data sharing, offering robust ways to protect sensitive information during collaboration.

With federated learning, organizations can train AI models across multiple devices or systems without ever moving raw data. Instead of sharing actual data, only the model updates are exchanged. This keeps private information stored locally, significantly reducing the risk of exposure or breaches.

Homomorphic encryption takes a different approach: it allows computations to be performed directly on encrypted data. The data stays encrypted throughout processing, and only the holder of the secret key can decrypt the final results. The trade-off is computational cost, since homomorphic operations are considerably slower than working on plaintext.
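To see what computing on encrypted data means, here is a toy version of the Paillier cryptosystem, an additively homomorphic scheme: multiplying two ciphertexts yields an encryption of the sum of the plaintexts. Paillier is only partially homomorphic (it supports addition, not arbitrary computation), the function names are illustrative, and the tiny primes used here offer no security. Real systems should use a vetted library.

```python
import math
import secrets

def keygen(p: int, q: int) -> tuple[tuple[int, int], tuple[int, int]]:
    """Build a Paillier key pair from two distinct primes (toy sizes only)."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    g = n + 1
    # With g = n + 1 the decryption constant is lam^-1 mod n (needs gcd(lam, n) == 1).
    mu = pow(lam, -1, n)
    return (n, g), (lam, mu)

def encrypt(pub: tuple[int, int], m: int) -> int:
    n, g = pub
    n2 = n * n
    while True:  # pick a random r in [1, n) that is coprime with n
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2

def decrypt(pub: tuple[int, int], priv: tuple[int, int], c: int) -> int:
    n, _ = pub
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n) * mu % n

def add_encrypted(pub: tuple[int, int], c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    n, _ = pub
    return (c1 * c2) % (n * n)

pub, priv = keygen(293, 433)  # insecure toy primes, for illustration only
c1, c2 = encrypt(pub, 1200), encrypt(pub, 3400)
print(decrypt(pub, priv, add_encrypted(pub, c1, c2)))  # 4600
```

The party that adds the ciphertexts never sees 1200 or 3400; only the key holder can read the total.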

By combining these approaches, businesses can collaborate confidently, safeguarding data privacy while adhering to strict regulatory requirements.