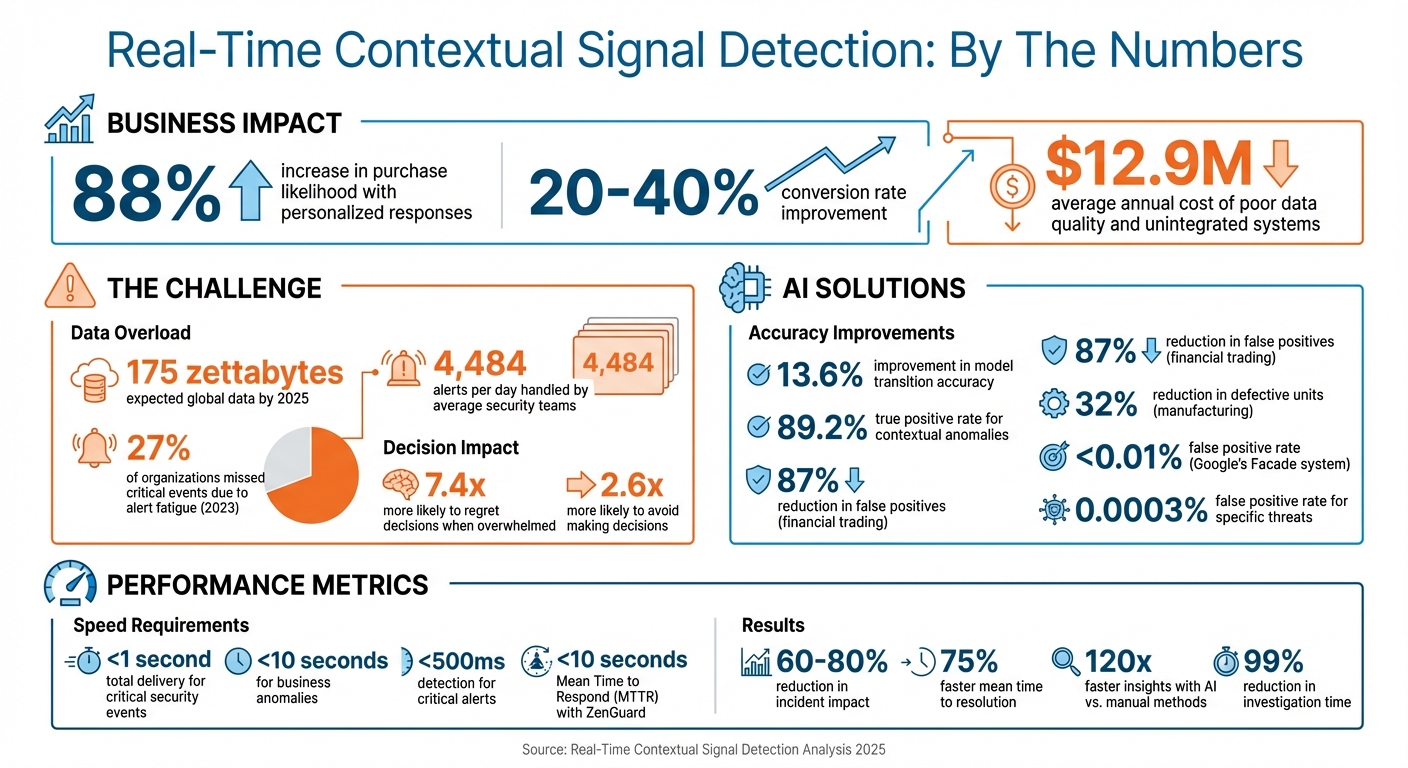

Real-time contextual signal detection helps businesses understand customer behavior instantly, making it possible to respond while intent is still strong. Unlike traditional analytics, which analyzes past data, real-time systems combine live actions (e.g., website visits) with historical data (e.g., purchase history) to deliver immediate insights. This approach boosts engagement, with personalized responses increasing purchase likelihood by up to 88% and conversions by 20–40%.
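As a rough illustration of combining a live action with historical data, the sketch below scores a single website event against a customer's purchase history. The field names, weights, and the 0.5 cutoff are hypothetical choices made for illustration. They are not values from any product or from the statistics quoted above.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class CustomerHistory:
    # Historical context (hypothetical fields)
    purchases_by_category: dict[str, int]
    days_since_last_purchase: int

@dataclass
class LiveEvent:
    # A live action captured from the website
    customer_id: str
    action: str      # e.g. "view_pricing", "add_to_cart"
    category: str

# Hypothetical weights: how strongly each live action suggests intent
ACTION_WEIGHTS = {"view_product": 0.2, "view_pricing": 0.4, "add_to_cart": 0.6}

def intent_score(event: LiveEvent, history: CustomerHistory) -> float:
    """Blend a live action with historical context into a 0..1 intent score."""
    score = ACTION_WEIGHTS.get(event.action, 0.0)
    # Historical affinity: prior purchases in the same category raise the score
    if history.purchases_by_category.get(event.category, 0) > 0:
        score += 0.3
    # Recency: a recent buyer returning to the site is a stronger signal
    if history.days_since_last_purchase <= 30:
        score += 0.2
    return min(score, 1.0)

def is_actionable(event: LiveEvent, history: CustomerHistory, cutoff: float = 0.5) -> bool:
    """True when the blended score is high enough to act on right now."""
    return intent_score(event, history) >= cutoff
```

A returning laptop buyer viewing a pricing page scores about 0.9 and is worth acting on immediately. A first-time visitor viewing a product page scores 0.2 and is not. The live event alone would not separate these two cases. The historical context is what makes the signal "contextual".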

However, challenges like data overload, fragmented tools, and false positives complicate implementation. AI is addressing these issues by identifying patterns, correlating signals, and prioritizing alerts with high accuracy. Tools like Momentum, Vectra AI, and Gong are leading this space, offering real-time insights and reducing alert fatigue. To succeed, businesses must integrate systems that ensure sub-second detection and response times while maintaining data quality.

Key takeaways:

- Real-time analysis drives faster, more relevant customer interactions.

- AI tools reduce false positives and improve detection accuracy.

- Tracking metrics like MTTD (Mean Time to Detect) and MTTR (Mean Time to Respond) shows whether the system is actually performing as intended.

Start small with pilot programs, prioritize actionable data, and optimize systems for speed and accuracy to stay competitive.

Real-Time Contextual Signal Detection: Key Statistics and Impact Metrics


Challenges in Real-Time Contextual Signal Detection

Detecting contextual signals in real time runs into several recurring obstacles, and each one can slow an organization's ability to respond quickly and effectively.

Data Overload and Alert Fatigue

The sheer volume of data being generated is staggering: the global datasphere was projected to reach 175 zettabytes by 2025. Yet despite this abundance, 27% of organizations reported in 2023 that they had missed critical security events because of alert fatigue. Alert fatigue sets in when teams, overwhelmed by a constant barrage of notifications, start tuning them out. Cybersecurity expert Tony Gonzalez sums up the situation:

"We have plenty of data but not much information"

This overload doesn't just lead to missed alerts - it also impacts decision-making. Overwhelmed leaders are 7.4 times more likely to regret their decisions and 2.6 times more likely to avoid making decisions altogether.

Part of the problem stems from what’s been dubbed "Shiny Bubble Syndrome." Businesses often rush to adopt the latest tools but lack the expertise or resources to effectively analyze the data these tools generate. Instead of focusing on metrics that align with strategic objectives, they attempt to measure everything, leading to what experts call "information paralysis". This chaos sets the stage for further complications in data integration and analysis.

Siloed Data and Tool Fragmentation

Disconnected systems are another major obstacle, creating blind spots that can obscure critical signals. When endpoint tools, network monitors, identity systems, and cloud platforms operate independently, analysts are forced to manually piece together logs from various dashboards. This slows down the process of identifying and addressing incidents. The financial impact is significant - poor data quality and unintegrated systems cost organizations an average of $12.9 million annually.
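To make the cost of silos concrete, here is a minimal sketch of the stitching step that analysts otherwise do by hand. It normalizes events from separate tools into one time-ordered view per host. The source names (`endpoint`, `network`, `identity`) and their field layouts are hypothetical. Real tools each have their own schemas.

```python
from __future__ import annotations
from collections import defaultdict
from datetime import datetime

# Hypothetical per-tool field names for the host and the timestamp
FIELD_MAP = {
    "endpoint": {"host": "device_name", "ts": "event_time"},
    "network": {"host": "src_host", "ts": "timestamp"},
    "identity": {"host": "workstation", "ts": "login_time"},
}

def normalize(source: str, record: dict) -> dict:
    """Map a tool-specific record onto a common {source, host, ts, raw} shape."""
    fields = FIELD_MAP[source]
    return {
        "source": source,
        "host": record[fields["host"]].lower(),  # tools disagree on case
        "ts": datetime.fromisoformat(record[fields["ts"]]),
        "raw": record,
    }

def unified_timeline(batches: dict[str, list[dict]]) -> dict[str, list[dict]]:
    """Merge records from every source into one time-ordered list per host."""
    by_host: dict[str, list[dict]] = defaultdict(list)
    for source, records in batches.items():
        for record in records:
            event = normalize(source, record)
            by_host[event["host"]].append(event)
    for events in by_host.values():
        events.sort(key=lambda e: e["ts"])
    return dict(by_host)
```

In this sketch, a network event and an endpoint event for `WS-01` and `ws-01` end up on the same timeline, in order. Across separate dashboards, an analyst would have to line them up manually.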

But the issue isn’t just about separate dashboards. Context matters. Raw data alone isn’t enough; it needs to be paired with the logic that interprets it. As Fakepixels explains:

"Exporting data without its analytical context loses critical interpretative insights"

Adding to the challenge, many organizations still rely on batch processing rather than real-time data integration, so the data they’re working with is often stale by the time it’s analyzed. On top of that, juggling multiple platforms forces analysts to switch between tools constantly. The result is fragmented focus, more operational stress, and longer investigations. This fragmentation not only delays responses but also raises the risk of misinterpreting important signals.

False Positives and Delayed Responses

False alarms are another thorn in the side of real-time detection efforts. Alerts that lack context - such as information about recent system changes, previous incidents, or affected services - end up wasting valuable resources. Without this enrichment, teams struggle to determine which signals are truly critical.
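Enrichment can be sketched as a set of lookups performed when the alert fires, so the alert carries its context with it. This sketch assumes hypothetical in-memory tables for recent changes, past incidents, and service ownership. In practice, that data would come from change-management, incident, or asset-inventory systems.

```python
from __future__ import annotations
from dataclasses import dataclass, field
from datetime import datetime, timedelta

@dataclass
class Alert:
    host: str
    rule: str
    fired_at: datetime
    context: dict = field(default_factory=dict)

# Hypothetical context sources, keyed by host
RECENT_CHANGES = {"db-01": [("2024-05-01T09:40:00", "schema migration v42")]}
PAST_INCIDENTS = {"db-01": ["INC-1001: disk pressure"]}
SERVICE_OF_HOST = {"db-01": "orders-api"}

def enrich(alert: Alert, change_window: timedelta = timedelta(hours=2)) -> Alert:
    """Attach recent changes, prior incidents, and the affected service."""
    recent = [
        desc
        for ts, desc in RECENT_CHANGES.get(alert.host, [])
        # only changes made shortly before the alert are plausible causes
        if timedelta(0) <= alert.fired_at - datetime.fromisoformat(ts) <= change_window
    ]
    alert.context = {
        "recent_changes": recent,
        "past_incidents": PAST_INCIDENTS.get(alert.host, []),
        "affected_service": SERVICE_OF_HOST.get(alert.host, "unknown"),
    }
    return alert
```

In this sketch, a latency alert on `db-01` twenty minutes after a schema migration arrives with that migration attached. That context usually decides whether the alert is urgent.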

In high-stakes scenarios, speed is everything. For critical security events, a common target for total end-to-end latency (detection, processing, and delivery) is under 1 second. For business anomalies, the window is wider, under 10 seconds, so that the data remains actionable. Organizations that implement effective real-time alerting systems often report a 60–80% reduction in incident impact and a 75% faster mean time to resolution. Mohtasham Sayeed Mohiuddin, Associate Solutions Architect at Confluent, highlights the broader implications:

"The difference between immediate detection and delayed response isn't just technical - it also includes financial losses, regulatory fines, and reputational damage"

Reducing false positives is essential, and AI-driven systems are stepping up to refine alert accuracy and accelerate response times.

How AI Improves Contextual Signal Detection

AI is transforming how systems interpret and react to data, moving away from outdated, static thresholds. Instead, it creates dynamic baselines by continuously comparing live data to historical trends - like annual spending patterns or typical network activity - allowing systems to adapt to changing conditions.
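A dynamic baseline can be as simple as an exponentially weighted moving average (EWMA) of the mean and variance, with each new value scored against it. This is a generic statistical sketch, not any particular vendor's model. The parameters `alpha`, `z_threshold`, and `warmup` are hypothetical tuning knobs.

```python
from __future__ import annotations
import math

class EwmaBaseline:
    """Adaptive baseline that tracks an exponentially weighted mean and variance."""

    def __init__(self, alpha: float = 0.1, z_threshold: float = 3.0, warmup: int = 5):
        self.alpha = alpha              # how quickly the baseline follows new data
        self.z_threshold = z_threshold  # deviations (in std units) that count as anomalous
        self.warmup = warmup            # observations to see before flagging anything
        self.mean: float | None = None
        self.var = 0.0
        self.count = 0

    def update(self, x: float) -> bool:
        """Score x against the current baseline, then fold it in. True means anomalous."""
        self.count += 1
        if self.mean is None:
            self.mean = x
            return False
        std = math.sqrt(self.var)
        anomalous = (
            self.count > self.warmup
            and std > 0
            and abs(x - self.mean) / std > self.z_threshold
        )
        # Incremental EWMA update of the mean and variance
        diff = x - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return anomalous
```

Because the baseline keeps moving, a gradual seasonal shift is absorbed rather than flagged, while a sudden spike still stands out. In this sketch, anomalous points are also folded into the baseline. Some systems skip them so that a sustained attack cannot "teach" the baseline to accept it.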

Pattern Recognition and Behavioral Baselines

AI doesn't just monitor thresholds; it uses logical detectors to track contextual variables such as weather, local events, or crowd density. When one of these detectors spots an anomaly, or "shock", that exceeds a set threshold, the system initiates a regime switch and moves to a behavioral model that better matches the current context. One way to do this is with Finite Mixture Models (FMMs), which represent complex behavior as a blend of distinct patterns, such as "holiday season" versus "off-season". The system can then infer from real-time data which behavioral mode is currently active.
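To illustrate the mixture-model idea, the sketch below treats daily order volume as a two-component Gaussian mixture ("holiday season" vs. "off-season"). It uses Bayes' rule to compute which regime most likely produced the latest observation. The weights, means, and spreads are made-up numbers. In practice they would be fitted to historical data, for example with expectation-maximization. The `switch_prob` rule is a hypothetical stand-in for the shock threshold that triggers a regime switch.

```python
from __future__ import annotations
import math

# Hypothetical mixture: regime -> (weight, mean, std_dev) of daily orders
REGIMES = {
    "off-season": (0.75, 1_000.0, 150.0),
    "holiday season": (0.25, 2_500.0, 400.0),
}

def normal_pdf(x: float, mu: float, sigma: float) -> float:
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))

def regime_posteriors(x: float) -> dict[str, float]:
    """P(regime | x) for each mixture component, via Bayes' rule."""
    joint = {name: w * normal_pdf(x, mu, s) for name, (w, mu, s) in REGIMES.items()}
    total = sum(joint.values())
    return {name: p / total for name, p in joint.items()}

def active_regime(x: float) -> str:
    """Most probable regime for a single observation."""
    post = regime_posteriors(x)
    return max(post, key=post.get)

def next_regime(current: str, x: float, switch_prob: float = 0.9) -> str:
    """Switch models only when another regime is strongly favored."""
    post = regime_posteriors(x)
    best = max(post, key=post.get)
    return best if best != current and post[best] >= switch_prob else current
```

Requiring a high posterior before switching keeps the system from flapping between models on borderline observations.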

This method has shown tangible results: a 13.6% improvement in model transition accuracy and an 89.2% true positive rate for detecting contextual anomalies. In manufacturing, AI-driven pattern detection cut defective units by 32%, while in financial trading, anomaly detection reduced false positives by 87%. Additionally, these systems perform real-time feature engineering with millisecond-level latency, comparing incoming data against historical patterns instantly.

Such precise detection capabilities pave the way for more advanced, multi-dimensional analyses.

Signal Correlation and Prioritization

Once patterns are recognized, AI takes detection further by correlating multiple data dimensions. It processes thousands of data points simultaneously, identifying clusters of related anomalies rather than isolating individual deviations. This approach helps pinpoint root causes and significantly reduces alert fatigue. For example, Google's "Facade" system, an unsupervised AI tool developed by Alex Kantchelian and Casper Neo, uses contrastive learning and clustering to analyze document access, SQL queries, and HTTP/RPC logs. Since 2018, it has maintained a false positive rate below 0.01% across Google's vast infrastructure. For specific threats, like unauthorized access to sensitive documents, that rate drops to 0.0003%.
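Facade itself relies on contrastive learning. The sketch below is a much simpler, rule-based stand-in that shows only the correlation step. Anomalies that share an entity (user, host, document) within a time window are merged into one cluster using union-find, so an analyst sees one incident instead of many alerts. The entity format and the 10-minute window are assumptions.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass(frozen=True)
class Anomaly:
    anomaly_id: str
    ts: float                  # seconds since epoch
    entities: frozenset[str]   # hypothetical format, e.g. {"user:alice", "host:ws-01"}

def cluster_anomalies(anomalies: list[Anomaly], window_s: float = 600.0) -> list[set[str]]:
    """Group anomalies that share any entity within window_s seconds (union-find)."""
    parent = {a.anomaly_id: a.anomaly_id for a in anomalies}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        parent[find(a)] = find(b)

    ordered = sorted(anomalies, key=lambda a: a.ts)
    for i, a in enumerate(ordered):
        for b in ordered[i + 1:]:
            if b.ts - a.ts > window_s:
                break  # sorted by time, so every later anomaly is farther away
            if a.entities & b.entities:
                union(a.anomaly_id, b.anomaly_id)

    clusters: dict[str, set[str]] = {}
    for a in anomalies:
        clusters.setdefault(find(a.anomaly_id), set()).add(a.anomaly_id)
    return list(clusters.values())
```

Clustering is transitive. A login anomaly and a later document-access anomaly land in the same cluster if a third anomaly links them through a shared host, even when the first two share no entity directly.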

AI also integrates context-aware risk scoring early in the detection process. Tools like Google Security Operations enrich detection workflows with data from EDR, firewalls, and IAM systems to calculate heuristic risk scores in real time. The ZenGuard framework, which combines UEBA and SOAR features, achieved a Mean Time to Respond (MTTR) of less than 10 seconds for threats like privilege escalation and lateral movement. Analysts can fine-tune sensitivity thresholds - Low, Medium, or High Confidence - to strike the right balance between catching real threats and reducing false alarms.
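Heuristic risk scoring at detection time can be sketched as a weighted combination of context signals from EDR, firewall, and IAM sources. The resulting score is then gated by the Low, Medium, or High Confidence setting described in the paragraph above. All inputs, weights, and cutoffs here are hypothetical. This is not the actual scoring logic of Google Security Operations or ZenGuard.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class DetectionContext:
    base_severity: float       # 0..1 from the detection rule itself
    asset_criticality: float   # 0..1, e.g. from an asset inventory
    edr_corroborated: bool     # did an EDR tool see related activity?
    privileged_identity: bool  # was an admin account involved (from IAM)?
    firewall_blocked: bool     # was the traffic already blocked?

def risk_score(ctx: DetectionContext) -> int:
    """Heuristic 0-100 score; all weights are illustrative assumptions."""
    score = 40 * ctx.base_severity + 30 * ctx.asset_criticality
    if ctx.edr_corroborated:
        score += 15
    if ctx.privileged_identity:
        score += 15
    if ctx.firewall_blocked:
        score -= 20  # already contained, so lower urgency
    return max(0, min(100, round(score)))

# Hypothetical minimum score an alert needs at each confidence setting
MIN_SCORE_FOR_CONFIDENCE = {"low": 30, "medium": 50, "high": 70}

def should_alert(ctx: DetectionContext, confidence: str = "medium") -> bool:
    """Higher confidence settings surface fewer, higher-scoring alerts."""
    return risk_score(ctx) >= MIN_SCORE_FOR_CONFIDENCE[confidence]
```

Scoring at detection time means a privileged-account event on a critical server is ranked above a blocked scan before any human looks at the queue.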


AI Tools for Real-Time Contextual Signal Detection

Several commercial tools now package these pattern-recognition and correlation capabilities for real-time contextual analysis.

Top Tools for Contextual Signal Analysis

Small and medium-sized businesses (SMBs) are increasingly turning to AI platforms to identify real-time signals. Momentum, for example, automates sales workflows by spotting tone shifts or missed follow-ups and triggering actions in systems like Slack and Salesforce. In 2025, companies such as Ramp, 1Password, and Demandbase reported deal cycles 2–3 times faster using Momentum’s automated risk alerts and CRM updates.

Vectra AI employs "Attack Signal Intelligence" to distinguish typical activity from actual threats, correlating behaviors across domains. This method significantly reduces the volume of alerts, enhances accuracy, and cuts down assessment time.

Meanwhile, tools like Avina monitor publicly available information - including earnings calls, 10-K filings, and product updates - to uncover subtle buying triggers tailored to specific customer profiles. Similarly, Gong analyzes buyer conversations with over 300 AI signal types, identifying sentiment changes, objections, and mentions of competitors. For mobile app developers, ContextSDK evaluates over 300 on-device signals - such as motion, battery life, and ambient light - to interpret user intent while maintaining privacy.

AI Tool Features and Pricing Comparison

When choosing a tool, both features and pricing are critical considerations. Pricing structures often depend on use cases and business size. For example, Gong typically costs between $1,200 and $1,500 per user annually for enterprise-level access, while Clari starts at around $60,000 per year. Many platforms, including Momentum, People.ai, and 6sense, offer custom pricing based on a company’s specific needs.

The AI for Businesses directory provides a curated list of real-time contextual signal detection tools, helping SMBs find solutions that align with their technical requirements and budgets. When evaluating tools, focus on those offering sub-second latency (ideally under 500ms for critical actions), seamless CRM and analytics platform integration, and privacy-first designs that comply with GDPR and CCPA standards.

Implementing Real-Time Signal Detection Solutions

Steps for Integration

To set up real-time signal detection, start with an event streaming platform such as Apache Kafka and a stream processor (for example, Kafka Streams or Apache Flink), paying close attention to window functions and state management. From there, deploy a mix of alerting patterns: threshold-based alerts for known issues, anomaly detection through statistical or machine learning models for unknown risks, and composite event alerts that correlate multiple signals. Attach key metadata, such as hostname, cluster ID, and service owner, to each alert for clarity and faster decision-making.
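These alerting patterns can be prototyped without a streaming cluster. The sketch below is an in-memory stand-in for what a stream processor would do. It groups events into one-minute tumbling windows per service, which is the windowed state. It then applies a threshold rule, a z-score anomaly rule against historical window counts, and a composite rule that fires when both coincide. Each alert also carries hostname, cluster ID, and owner metadata. The event fields, thresholds, and composite condition are all hypothetical.

```python
from __future__ import annotations
from collections import defaultdict
from statistics import mean, pstdev

WINDOW_S = 60          # tumbling window length in seconds
ERROR_THRESHOLD = 50   # threshold rule for a known failure mode (hypothetical)
Z_LIMIT = 3.0          # anomaly rule for unusual request volume (hypothetical)

def tumbling_windows(events: list[dict]) -> dict[tuple[str, int], dict]:
    """Aggregate events into per-(service, window) state."""
    state: dict[tuple[str, int], dict] = defaultdict(
        lambda: {"requests": 0, "errors": 0, "meta": None}
    )
    for e in events:
        s = state[(e["service"], int(e["ts"] // WINDOW_S))]
        s["requests"] += 1
        s["errors"] += int(e["is_error"])
        s["meta"] = {k: e[k] for k in ("hostname", "cluster_id", "owner")}
    return dict(state)

def evaluate(state: dict[tuple[str, int], dict],
             history: dict[str, list[int]]) -> list[dict]:
    """Apply threshold, anomaly, and composite rules to each window."""
    alerts = []
    for (service, window), s in sorted(state.items()):
        fired = []
        if s["errors"] >= ERROR_THRESHOLD:
            fired.append("threshold:error_count")
        past = history.get(service, [])
        if len(past) >= 2 and pstdev(past) > 0:
            z = (s["requests"] - mean(past)) / pstdev(past)
            if abs(z) > Z_LIMIT:
                fired.append("anomaly:request_volume")
        if len(fired) == 2:  # composite: both signals in the same window
            fired.append("composite:errors_with_traffic_anomaly")
        for rule in fired:
            alerts.append({"rule": rule, "service": service, "window": window, **s["meta"]})
    return alerts
```

In a real deployment, the window state would live in the stream processor's state store rather than in a Python dict. The rule logic would look much the same.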

To maintain accuracy, enforce data governance by using schema validation. This ensures alerts are only triggered when data quality is high, reducing false positives. Finally, link detection signals to automated actions, such as activating circuit breakers or locking accounts. Integrate these signals with enterprise tools like ServiceNow or Slack to automatically create incidents and streamline workflows.
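Schema validation as a gate can be sketched with a hand-rolled field and type check. A production system would more likely use a schema registry or a validation library. Events that fail validation are quarantined instead of evaluated, and valid events are routed to automated actions. The schema, the `lock_account` and `open_incident` handlers, and the 10-attempt rule are hypothetical placeholders. Real handlers would call an identity provider, ServiceNow, or Slack.

```python
from __future__ import annotations

# Hypothetical schema: required field -> expected Python type
LOGIN_SCHEMA = {"user": str, "ip": str, "failed_attempts": int, "ts": float}

def validate(event: dict, schema: dict[str, type]) -> list[str]:
    """Return a list of problems; an empty list means the event is valid."""
    problems = []
    for name, expected in schema.items():
        if name not in event:
            problems.append(f"missing {name}")
        # bool is a subclass of int in Python, so reject it explicitly
        elif not isinstance(event[name], expected) or isinstance(event[name], bool):
            problems.append(f"{name} should be {expected.__name__}")
    return problems

actions_taken: list[str] = []

def lock_account(event: dict) -> None:
    actions_taken.append(f"locked {event['user']}")  # stand-in for an identity-provider call

def open_incident(event: dict) -> None:
    actions_taken.append(f"incident for {event['user']}")  # stand-in for ServiceNow/Slack

def handle(event: dict, quarantine: list[dict]) -> None:
    """Gate on data quality first, then trigger automated actions."""
    if validate(event, LOGIN_SCHEMA):
        quarantine.append(event)  # bad data never triggers an alert
        return
    if event["failed_attempts"] >= 10:
        lock_account(event)
        open_incident(event)
```

Validating before evaluating means a malformed field, such as a count arriving as a string, lands in quarantine for investigation instead of silently producing a false alert.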

Organizations that adopt real-time alerting often see a 60-80% reduction in incident impact and achieve a 75% faster mean time to resolution. AI-powered insights can further accelerate this process, delivering answers up to 120 times faster than manual methods and cutting investigation time by 99%.

Measuring Success with MTTD and MTTR

After integrating your detection system, it’s crucial to measure its performance. Two key metrics to track are Mean Time to Detect (MTTD), which shows how quickly you identify an incident, and Mean Time to Respond (MTTR), which measures how long it takes to respond to and fully resolve it. Tracking these metrics over time reveals trends and shows how effective your tools are.
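Both metrics are averages over incident timestamps. The minimal sketch below assumes each incident record stores when it started, when it was detected, and when it was resolved. It measures MTTR from detection to resolution. Some teams measure it from the start of the incident instead, so state whichever convention you use.

```python
from __future__ import annotations
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class Incident:
    kind: str
    started: datetime
    detected: datetime
    resolved: datetime

def mean_delta(deltas: list[timedelta]) -> timedelta:
    """Average a list of durations."""
    return sum(deltas, timedelta(0)) / len(deltas)

def mttd(incidents: list[Incident]) -> timedelta:
    """Mean Time to Detect: average of (detected - started)."""
    return mean_delta([i.detected - i.started for i in incidents])

def mttr(incidents: list[Incident]) -> timedelta:
    """Mean Time to Respond, measured here as average (resolved - detected)."""
    return mean_delta([i.resolved - i.detected for i in incidents])
```

For two incidents detected after 4 and 2 minutes and resolved 30 and 10 minutes after detection, MTTD is 3 minutes and MTTR is 20 minutes.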

For example, critical security alerts should be detected within 500 milliseconds, processed in under 200 milliseconds, and delivered in less than 300 milliseconds - keeping the total time under one second. For system outages, detection and delivery should happen within two seconds.
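The per-stage targets for critical alerts (500 + 200 + 300 ms) add up to the one-second end-to-end budget. A small check like the one below shows which stage is consuming the budget. The stage names and measured values are hypothetical. The check treats a stage as over budget only when it exceeds its target, and requires the end-to-end total to stay strictly under the limit.

```python
from __future__ import annotations

# Per-stage targets for critical security alerts, in milliseconds
CRITICAL_BUDGET_MS = {"detection": 500, "processing": 200, "delivery": 300}
END_TO_END_LIMIT_MS = 1_000

def check_latency(measured_ms: dict[str, float],
                  budget_ms: dict[str, float] = CRITICAL_BUDGET_MS,
                  limit_ms: float = END_TO_END_LIMIT_MS) -> tuple[bool, list[str]]:
    """Return (within_limit, stages_over_budget) for one alert's timings."""
    over = [stage for stage, target in budget_ms.items() if measured_ms[stage] > target]
    total = sum(measured_ms[stage] for stage in budget_ms)
    return total < limit_ms and not over, over
```

For the two-second outage target, pass `limit_ms=2_000` together with your own per-stage split, since only the total is specified.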

To refine these metrics, segment MTTD and MTTR by incident type, severity, and detection method to find where improvement is needed. Also track your alert-to-incident ratio: the number of alerts raised for each confirmed incident. A lower ratio indicates better noise reduction. Faster MTTD also shortens "dwell time", reducing the opportunity for attackers to move laterally or steal data.
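Segmenting the metrics is a group-by over incident records. The sketch below uses hypothetical `(incident_type, detection_method, minutes_to_detect)` tuples to compute MTTD per segment. It also computes alerts per confirmed incident from raw counts.

```python
from __future__ import annotations
from collections import defaultdict
from statistics import mean

def mttd_by_segment(records: list[tuple[str, str, float]]) -> dict[tuple[str, str], float]:
    """Mean minutes-to-detect, keyed by (incident_type, detection_method)."""
    groups: dict[tuple[str, str], list[float]] = defaultdict(list)
    for kind, method, minutes in records:
        groups[(kind, method)].append(minutes)
    return {segment: mean(values) for segment, values in groups.items()}

def alerts_per_incident(total_alerts: int, confirmed_incidents: int) -> float:
    """Alerts raised per confirmed incident; lower means less noise."""
    if confirmed_incidents == 0:
        return float("inf")  # every alert was noise
    return total_alerts / confirmed_incidents
```

A split like this might show, for example, that ML-based detection catches phishing in minutes while rule-based detection takes half an hour. That comparison tells you where tuning effort will pay off.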

Reducing Noise and Improving Accuracy

To enhance detection accuracy, use heuristic-driven risk scoring at the point of detection. This allows for quick prioritization based on the importance of the affected entity, rather than waiting for human triage. Exclude low-risk threats from expected environments, like malware tests in sandbox systems or non-sensitive development networks, to avoid unnecessary alerts. AI can also automate initial context gathering and deduplication, cutting investigation time from 15-20 minutes per alert to just 3-4 minutes. As Sricharan Sridhar, Cyber Defense Lead at Abnormal, puts it:
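Environment-based exclusions and deduplication are both cheap to apply before an alert reaches a human. This sketch assumes each alert carries hypothetical `env` and `category` tags and is fingerprinted by rule, host, and user. The sandbox exclusion mirrors the example in the text. The `dev` entry is an illustrative assumption.

```python
from __future__ import annotations

# Hypothetical (environment, threat category) pairs that are expected and suppressed
EXCLUSIONS = {("sandbox", "malware_test"), ("dev", "low_risk_scan")}

def fingerprint(alert: dict) -> tuple[str, str, str]:
    """Alerts with the same rule, host, and user are treated as duplicates."""
    return (alert["rule"], alert["host"], alert.get("user", ""))

def triage_queue(alerts: list[dict]) -> list[dict]:
    """Drop excluded alerts and collapse duplicates, keeping a count per fingerprint."""
    merged: dict[tuple[str, str, str], dict] = {}
    for a in alerts:
        if (a["env"], a["category"]) in EXCLUSIONS:
            continue
        key = fingerprint(a)
        if key in merged:
            merged[key]["count"] += 1
        else:
            merged[key] = {**a, "count": 1}
    return list(merged.values())  # dicts keep insertion order
```

An analyst then sees one entry with a count of 2 instead of two identical alerts, and never sees the sandbox malware test at all.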

"We are not replacing the analyst. We are replacing the toil and elevating the expertise".

Dynamic thresholds, powered by machine learning, can establish baseline patterns of normal behavior and adjust sensitivity levels (Low, Medium, High Confidence) to balance detection accuracy against false positives. Techniques like trigger throttling and rolling windows can cap alert frequency while ensuring critical threats aren’t missed. Since 60-70% of alerts in security operations centers are typically benign, regularly retraining these models on fresh data is vital for maintaining precision.
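Trigger throttling over a rolling window can be sketched as a per-rule log of recent firings. Non-critical alerts beyond a cap are suppressed and counted, while critical alerts always pass, so throttling never hides a real threat. The default cap of 5 alerts per 10 minutes is an arbitrary example.

```python
from __future__ import annotations
from collections import defaultdict, deque

class Throttle:
    """Caps non-critical alerts per rule within a rolling time window."""

    def __init__(self, max_alerts: int = 5, window_s: float = 600.0):
        self.max_alerts = max_alerts
        self.window_s = window_s
        self.recent: dict[str, deque[float]] = defaultdict(deque)  # firing times per rule
        self.suppressed: dict[str, int] = defaultdict(int)         # for later review

    def allow(self, rule: str, ts: float, critical: bool = False) -> bool:
        q = self.recent[rule]
        while q and ts - q[0] >= self.window_s:  # drop firings outside the window
            q.popleft()
        if critical or len(q) < self.max_alerts:
            q.append(ts)
            return True
        self.suppressed[rule] += 1
        return False
```

Counting suppressed alerts, rather than discarding them silently, lets you check later whether the cap was hiding anything important.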

Conclusion: The Future of Contextual Signal Detection

The shift toward proactive signal detection is reshaping how businesses handle security and data analysis. On average, security teams deal with an overwhelming 4,484 alerts per day, with 67% of these alerts being ignored due to false positives and alert fatigue. The companies that succeed will adopt high-context AI systems - tools that don’t just flag when data changes but explain why those changes happen.

Agentic AI workflows are paving the way for more autonomous systems. These workflows allow AI agents to monitor, correlate, and act on signals without human intervention. By integrating structured data with unstructured inputs like earnings calls or social media sentiment, these systems can deliver insights in milliseconds. As Marina Danilevsky, Senior Research Scientist at IBM, explains:

"The most successful deployments are focused, scoped and address a pain point".

To stay ahead, businesses should begin by testing these workflows in controlled environments before scaling them up. Prioritizing data quality over sheer volume is key - context-rich data streams reduce the risk of AI "hallucinations" and unnecessary computational strain. Additionally, infrastructure must be optimized to handle unstructured data processing at high speeds.

Transparency will be a cornerstone as these systems grow more advanced. Explainable AI (XAI) is essential for building trust and ensuring fairness in how contextual signals are interpreted. With 97% of brands planning to increase their AI budgets over the next five years, the real challenge lies in how quickly these tools can be implemented to maintain a competitive edge.

The future belongs to organizations that can seamlessly combine speed with context, automation with transparency, and cutting-edge technology with human expertise. Start small - pilot programs are a great way to measure impact using metrics like MTTD (Mean Time to Detect) and MTTR (Mean Time to Respond). Use these real-world results to refine your systems continually. By improving these detection metrics, businesses can unlock the full potential of real-time contextual signal analysis and solidify their strategic advantage.

FAQs

How does AI help minimize false positives in real-time signal detection?

AI reduces false positives in real-time signal detection by examining data within its context and spotting patterns that separate real threats from benign irregularities. By considering the environment where the data originates, AI makes sharper decisions and cuts down on unnecessary alerts.

This means businesses can concentrate on tackling real risks, freeing up time and resources that would otherwise be wasted chasing false alarms.

How can AI tools like Momentum and Vectra AI benefit businesses?

AI tools such as Momentum and Vectra AI enable businesses to detect and interpret real-time contextual signals, streamlining operations and enhancing decision-making. By taking over repetitive tasks and analyzing intricate data patterns, these tools allow businesses to adapt quickly to shifting circumstances and make more informed, data-backed decisions.

Incorporating AI solutions not only lightens the manual workload but also boosts productivity and scales as the business grows. These tools have become a key resource for businesses aiming to stay competitive in today's fast-paced environment.

How can businesses reduce data overload and avoid alert fatigue?

To tackle data overload and prevent alert fatigue, businesses can implement smart, context-aware alerting systems that zero in on critical events while filtering out the noise. These systems work by analyzing data in real time, spotting important patterns - like suspicious transactions or equipment malfunctions - so teams can act swiftly without being bogged down by irrelevant notifications.

Establishing clear thresholds for alerts, based on their priority and urgency, ensures companies are only notified about events that truly matter. Regularly fine-tuning these settings allows organizations to stay responsive to changing conditions, making operations smoother and decisions sharper. Incorporating AI-powered tools that process data within its context takes this a step further, highlighting actionable insights, cutting through the clutter, and strengthening overall operational efficiency.